Linear Modeling QC

Ittai Eres

2017-10-15

Last updated: 2018-09-28

Code version: e0ca9da

QC on QQ Plots

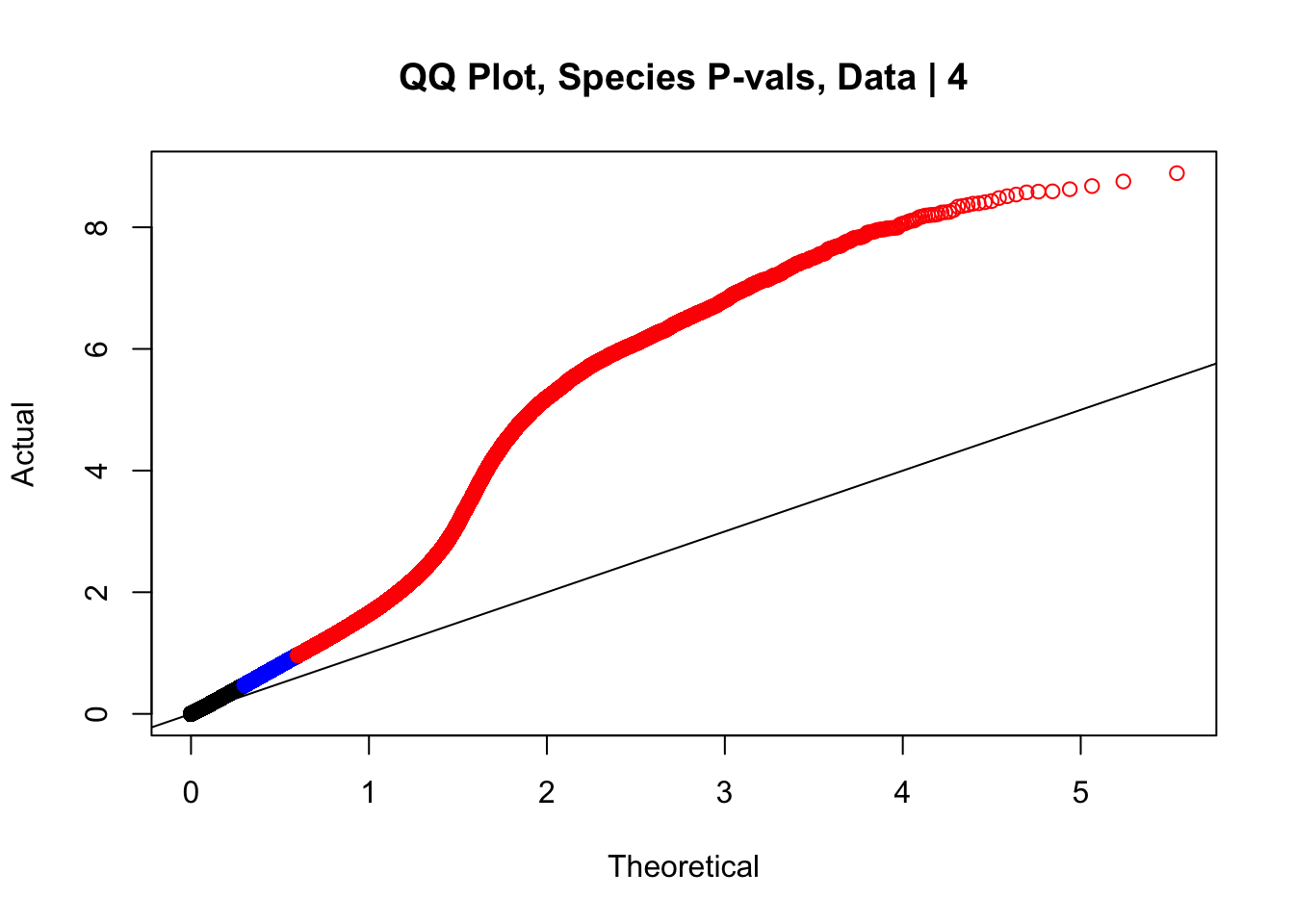

Having observed inflated p-values in QQ plots from doing linear modeling, as well as a stark asymmetry in the distribution of betas for the species term in the volcano plot, here I move to examine both issues. The QQ plot issue is not truly concerning, as we were not necessarily expecting normality in this set of hits enriched for significant Hi-C contacts. It seems likely that any set of significant Hi-C hits called independently in each species will have some inflation in the significance of a species term for linearly modeling the interaction frequencies. That the QQ plot does not look like a GWAS QQ plot is not truly concerning, however, just to be sure, first I will run a series of QQ plots once again after shuffling some of the metadata labels. This simply ensures the inflation seen is not an artifact of processing. I tackle the volcano plot asymmetry after alleviating QQ plot concerns and doing some further genomic filtering explained below.

#This file will examine first the inflation of p-values from the QQ plot at the end of linear_modeling.Rmd, and then the asymmetry seen in the volcano plots from linear modeling.

#First, grab the qqplotting function I utilize from linear_modeling.Rmd:

newqqplot=function(pvals, quant, title){

len = length(pvals)

res=qqplot(-log10((1:len)/(1+len)),pvals,plot.it=F)

plot(res$x,res$y, main=title, xlab="Theoretical", ylab="Actual", col=ifelse(res$y>as.numeric(quantile(res$y, quant[1])), ifelse(res$y>as.numeric(quantile(res$y, quant[2])), "red", "blue"), "black"))

abline(0, 1)

}

#Now, as always, read in data files modified by initial_QC.Rmd and linear_modeling.Rmd

full.data <- fread("~/Desktop/Hi-C/full.data.10.2018", header = TRUE, data.table=FALSE, stringsAsFactors = FALSE, showProgress = FALSE)

data.4 <- fread("~/Desktop/Hi-C/data.4.10.2018", header=TRUE, data.table=FALSE, stringsAsFactors = FALSE, showProgress = FALSE)

#This is the QQ plot for species from the linear model for Hi-C values from linear_modeling.Rmd. We can see a significant inflation of p-values here rising above the expected normal distribution alarmingly quickly:

newqqplot(-log10(data.4$sp_pval), c(0.5, 0.75), "QQ Plot, Species P-vals, Data | 4")

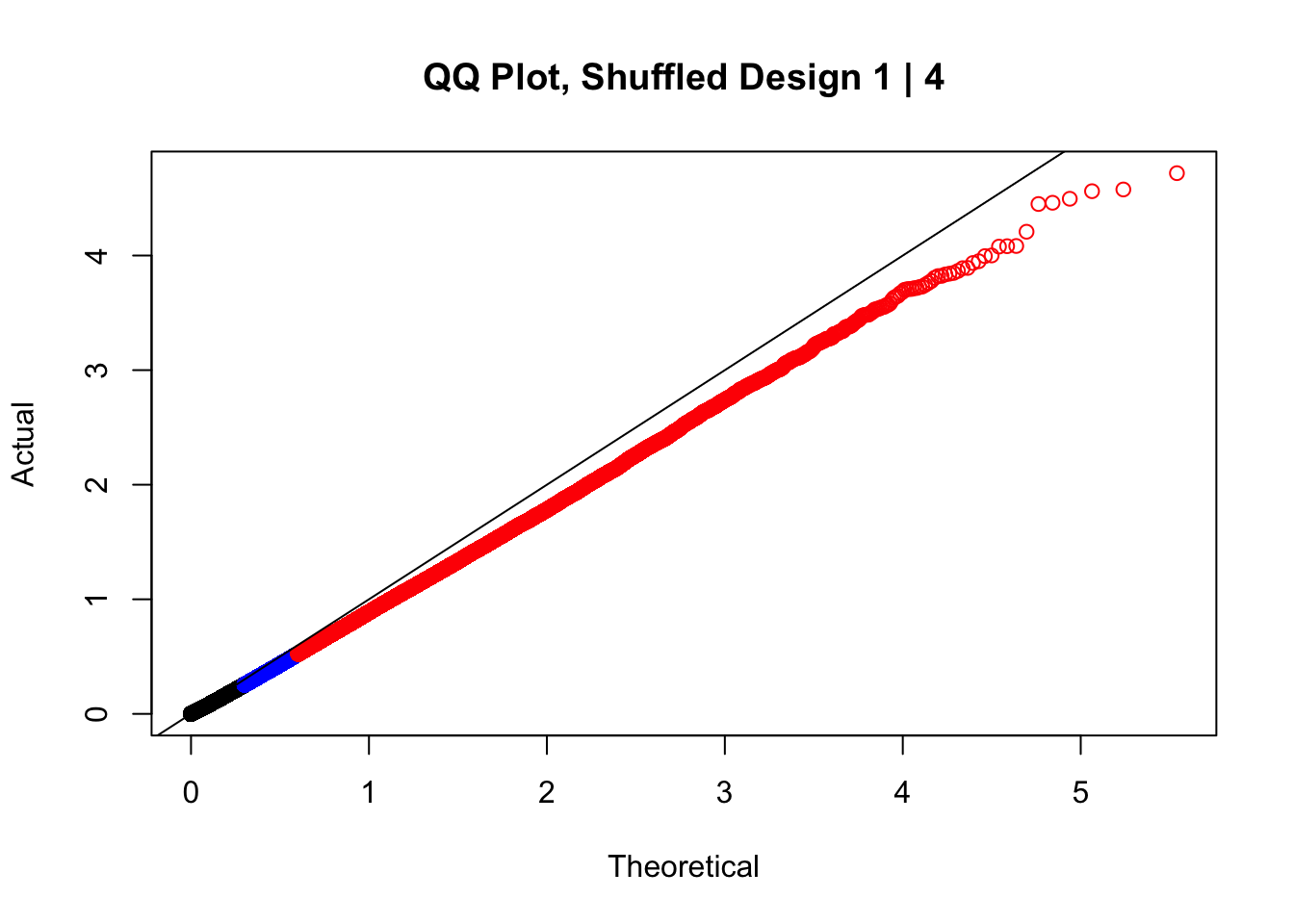

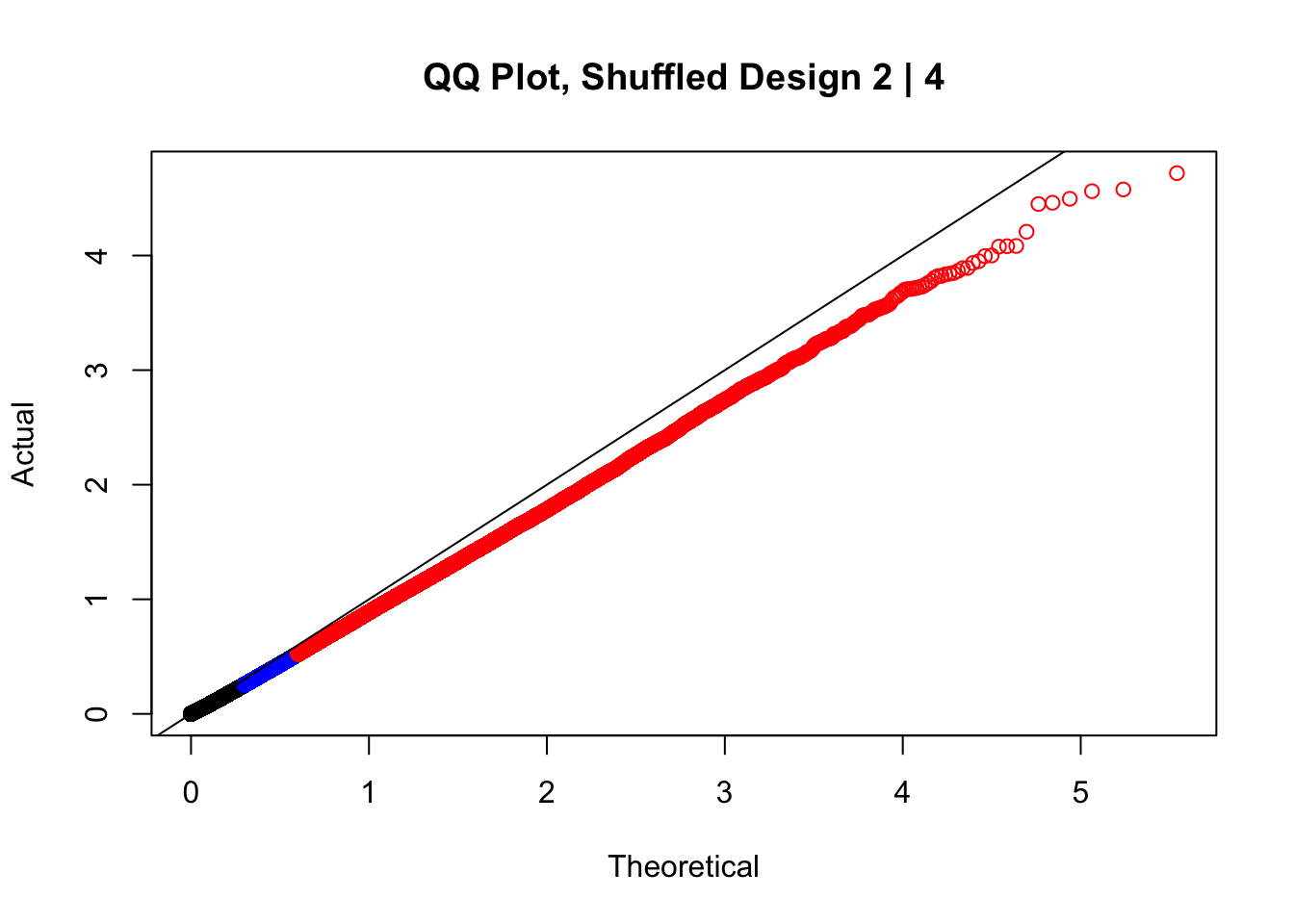

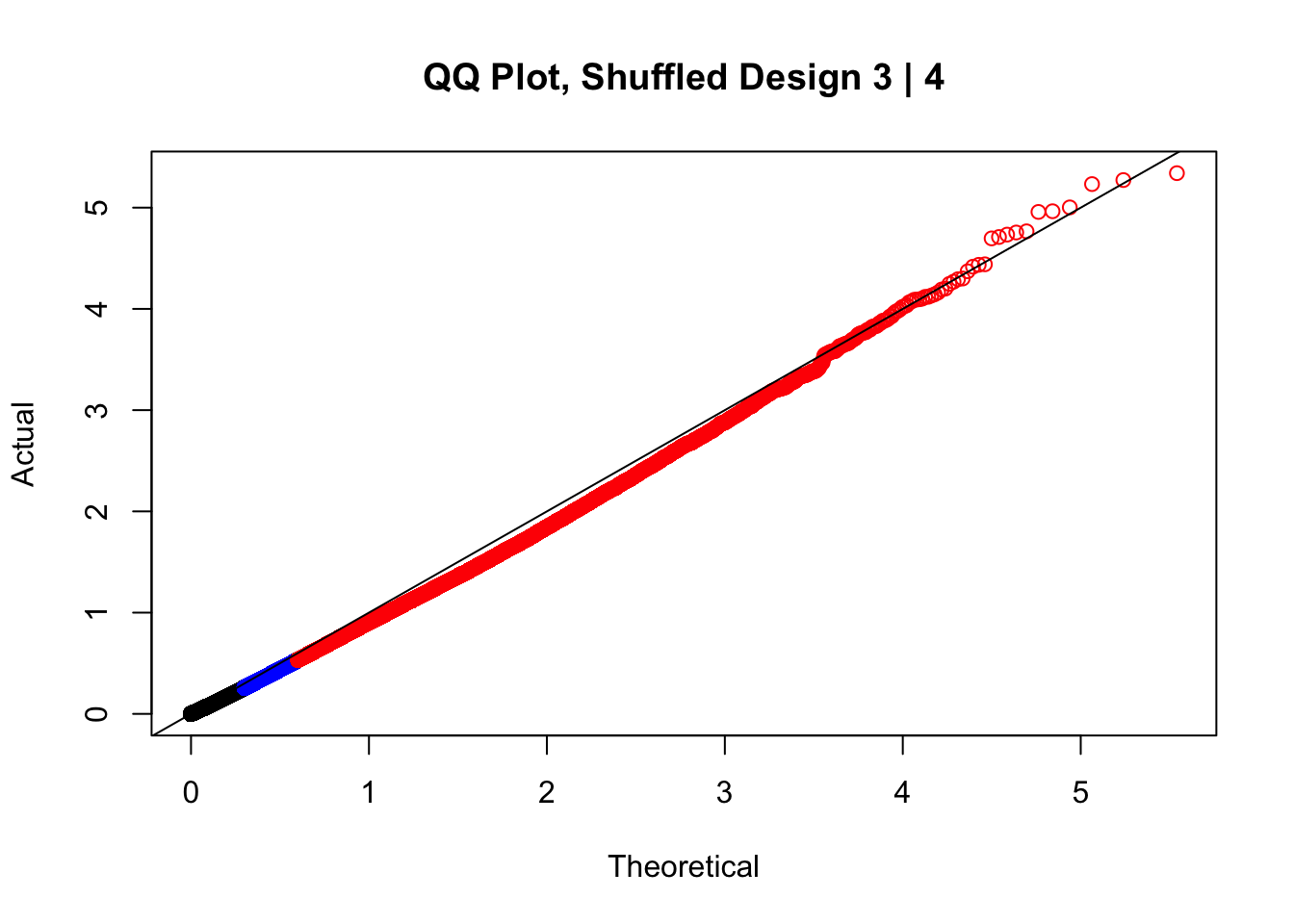

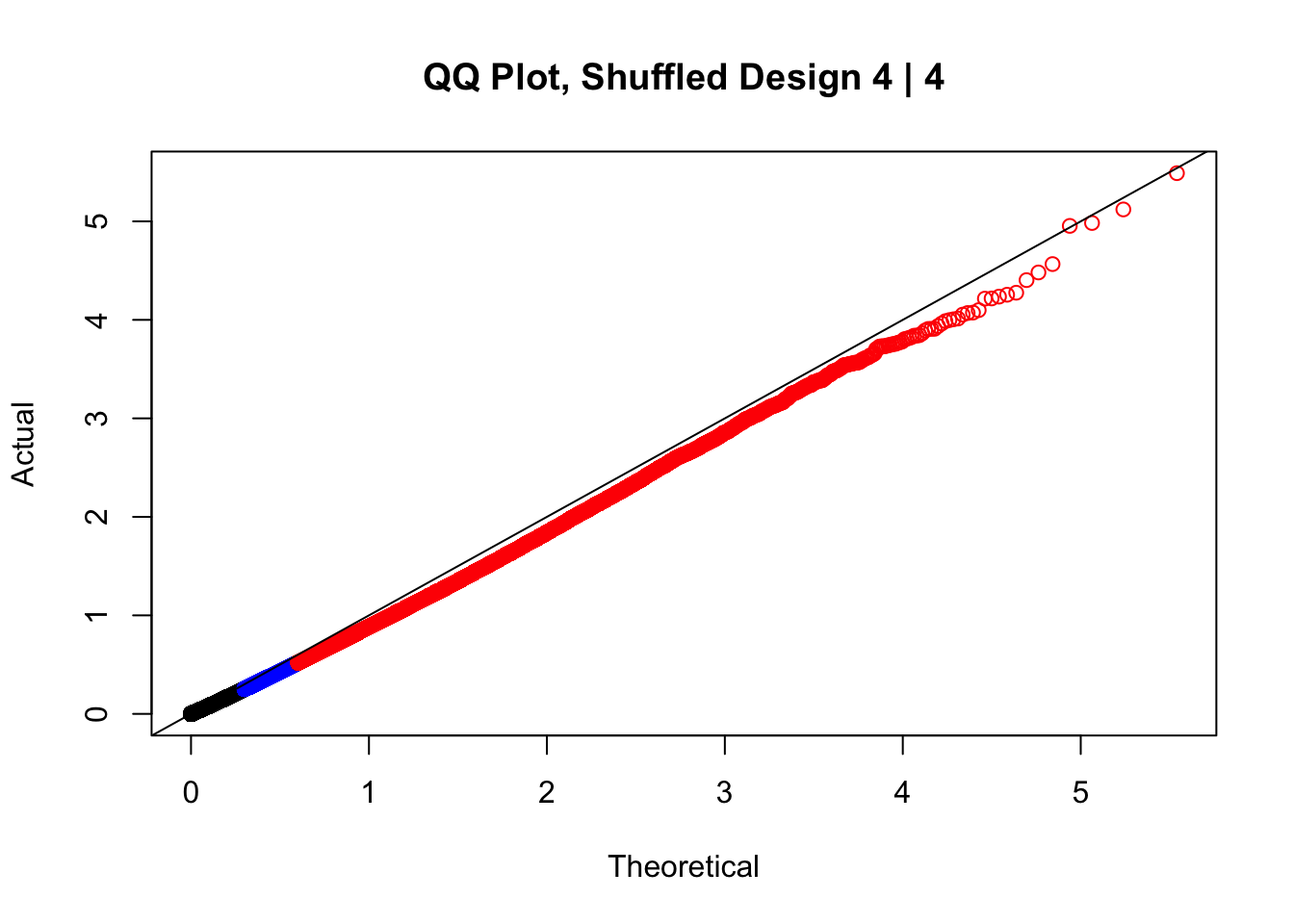

#In order to check that this extreme inflation of p values is not merely a technical artifact, here I try shuffling the species labels and running the linear model again. I would hope to see a more normal QQplot and this would perhaps suggest this the inflation seen is due to true biological effects, rather than technical factors. The fake designs just have some species swapped; the first two fake designs are balanced across sex and batch, and the second two are balanced with respect to batch (equal numbers of humans and chimps in both), but any sex-species interaction would be confounded with batch (since all members of a species in one batch are the same sex). Note that this ultimately shouldn't matter since I'm just for checking QQ normality here, especially since I'll start with the full model but then also remove batch and sex as covariates and re-check the QQ plot, to rule out overfitting.

fake.meta1 <- data.frame("SP"=c("H", "C", "H", "C", "H", "C", "C", "H"), "SX"=c("F", "M", "M", "F", "M", "F", "M", "F"), "Batch"=c(1, 1, 1, 1, 2, 2, 2, 2))

fake.meta2 <- data.frame("SP"=c("C", "H", "C", "H", "C", "H", "H", "C"), "SX"=c("F", "M", "M", "F", "M", "F", "M", "F"), "Batch"=c(1, 1, 1, 1, 2, 2, 2, 2))

fake.meta3 <- data.frame("SP"=c("H", "C", "C", "H", "C", "H", "C", "H"), "SX"=c("F", "M", "M", "F", "M", "F", "M", "F"), "Batch"=c(1, 1, 1, 1, 2, 2, 2, 2))

fake.meta4 <- data.frame("SP"=c("C", "H", "H", "C", "C", "H", "C", "H"), "SX"=c("F", "M", "M", "F", "M", "F", "M", "F"), "Batch"=c(1, 1, 1, 1, 2, 2, 2, 2))

#First, test out the fake metadataframess utilizing the linear model with all covariates included--species, sex, and batch.

lmFit(data.4[,304:311], model.matrix(~1+fake.meta1$SP+fake.meta1$SX+fake.meta1$Batch)) %>% eBayes(.) %>% topTable(., coef=2, sort.by="none", number=Inf) -> fake1

lmFit(data.4[,304:311], model.matrix(~1+fake.meta2$SP+fake.meta2$SX+fake.meta2$Batch)) %>% eBayes(.) %>% topTable(., coef=2, sort.by="none", number=Inf) -> fake2

lmFit(data.4[,304:311], model.matrix(~1+fake.meta3$SP+fake.meta3$SX+fake.meta3$Batch)) %>% eBayes(.) %>% topTable(., coef=2, sort.by="none", number=Inf) -> fake3Coefficients not estimable: fake.meta3$SXM Warning: Partial NA coefficients for 347206 probe(s)Warning in .ebayes(fit = fit, proportion = proportion, stdev.coef.lim =

stdev.coef.lim, : Estimation of var.prior failed - set to default valuelmFit(data.4[,304:311], model.matrix(~1+fake.meta4$SP+fake.meta4$SX+fake.meta4$Batch)) %>% eBayes(.) %>% topTable(., coef=2, sort.by="none", number=Inf) -> fake4

newqqplot(-log10(fake1$P.Value), c(0.5, 0.75), "QQ Plot, Shuffled Design 1 | 4")

newqqplot(-log10(fake2$P.Value), c(0.5, 0.75), "QQ Plot, Shuffled Design 2 | 4")

newqqplot(-log10(fake3$P.Value), c(0.5, 0.75), "QQ Plot, Shuffled Design 3 | 4")

newqqplot(-log10(fake4$P.Value), c(0.5, 0.75), "QQ Plot, Shuffled Design 4 | 4")

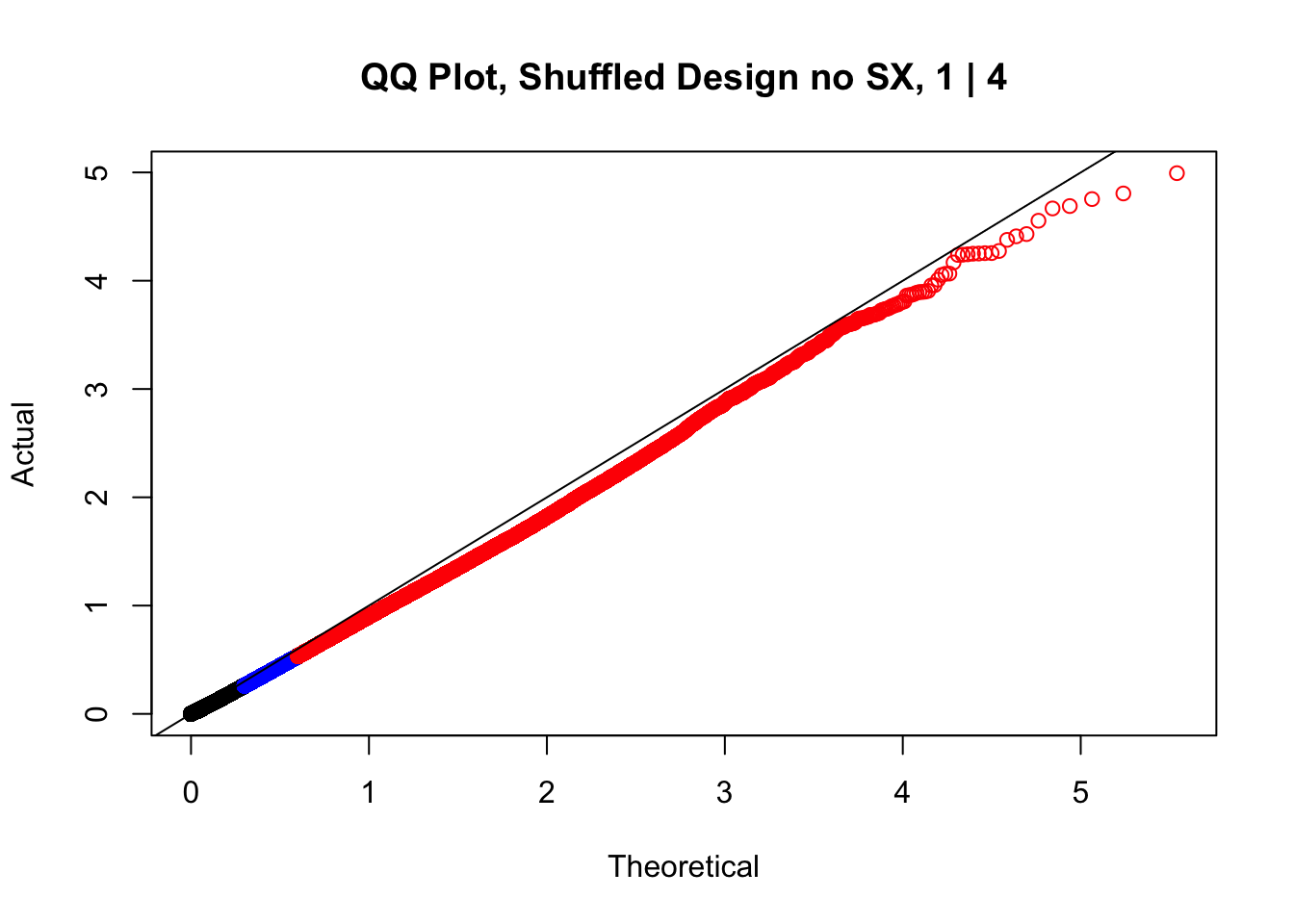

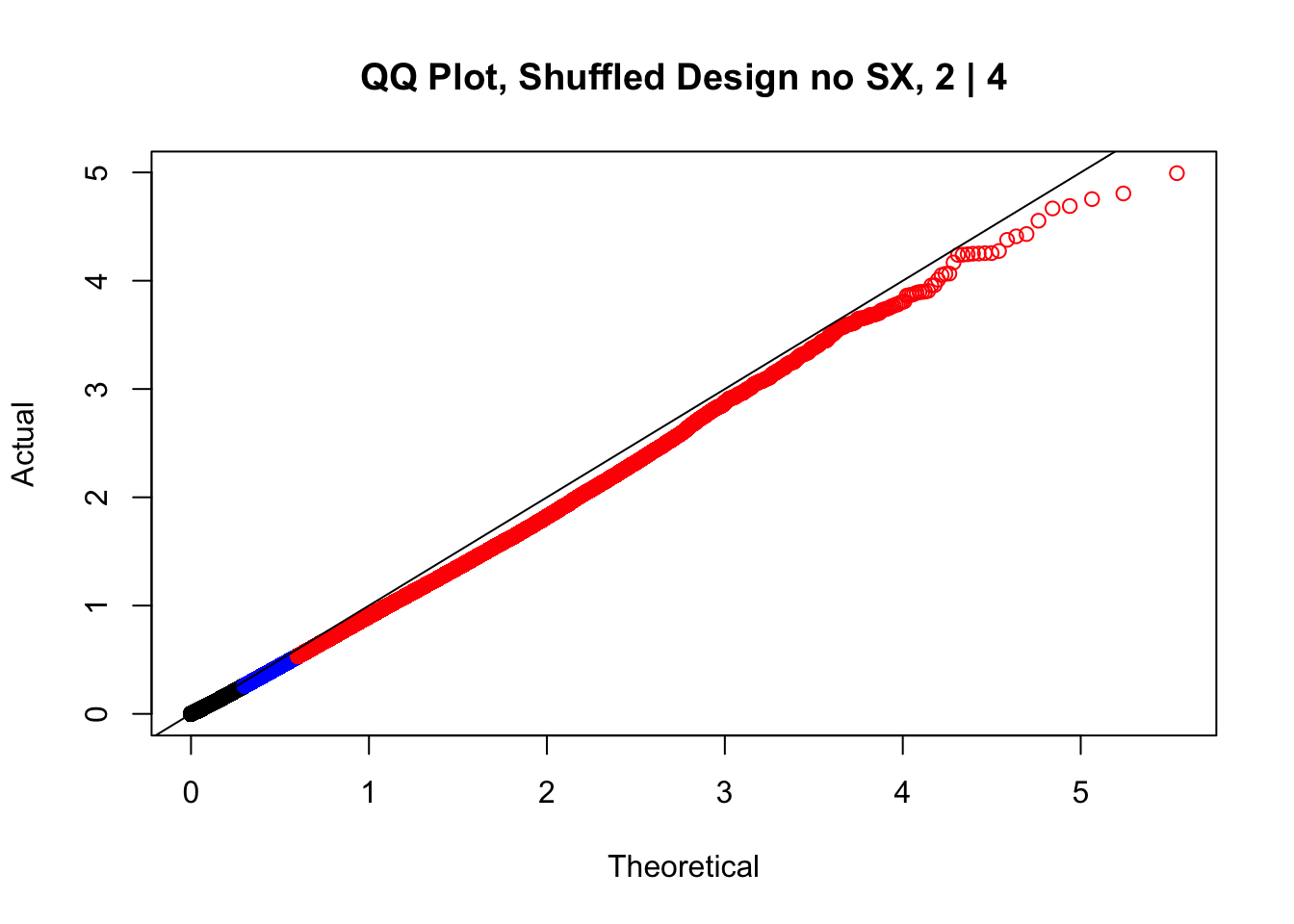

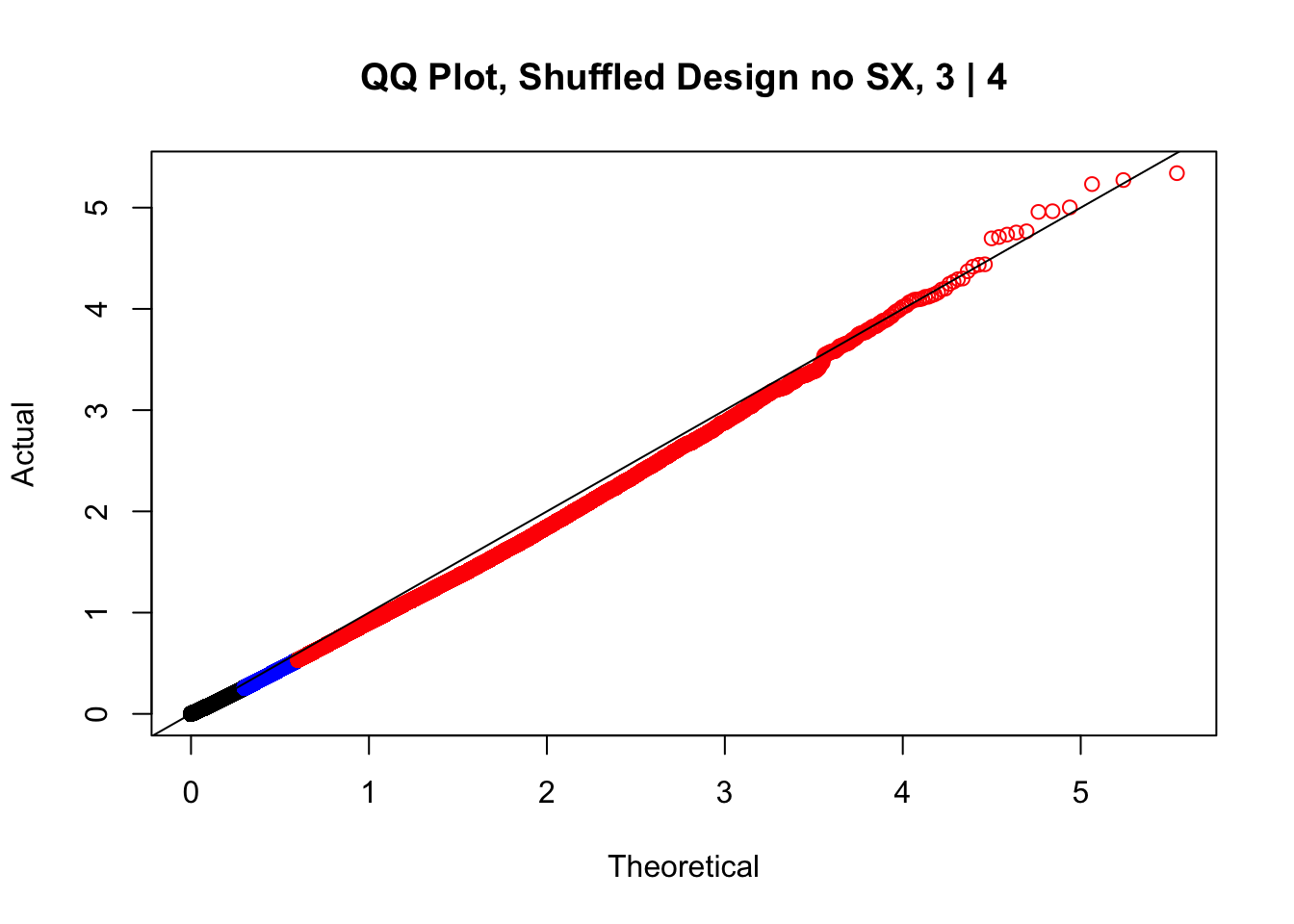

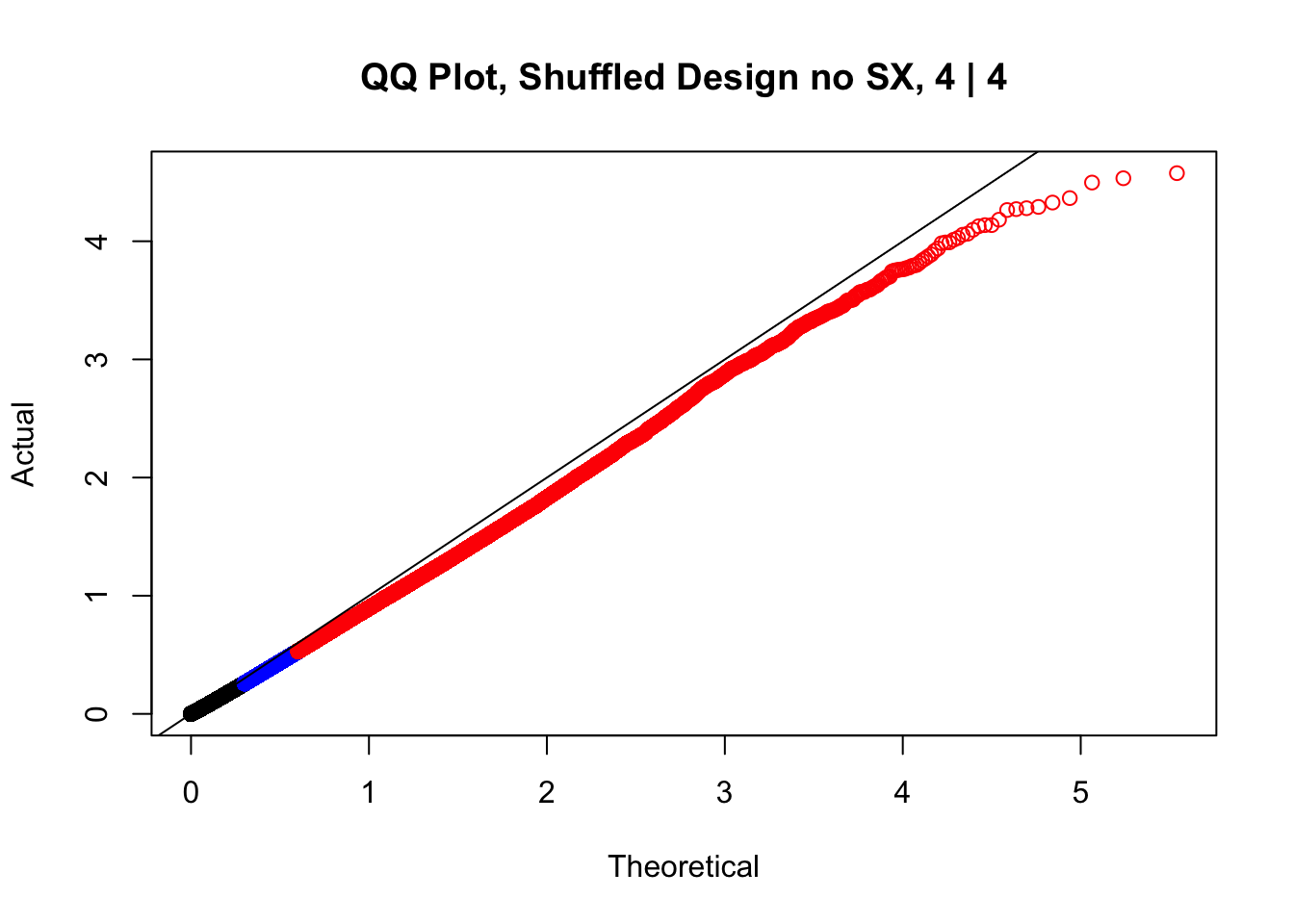

#While these QQ plots are nice in that they no longer show inflation of p-values quickly, many of them show slight deflation. Here I try doing the same thing again but without sex and then without batch, and then without both, as covariates--to account for the possibility that inclusion of these covariates in the model is overfitting:

lmFit(data.4[,304:311], model.matrix(~1+fake.meta1$SP+fake.meta1$Batch)) %>% eBayes(.) %>% topTable(., coef=2, sort.by="none", number=Inf) -> fake1

lmFit(data.4[,304:311], model.matrix(~1+fake.meta2$SP+fake.meta2$Batch)) %>% eBayes(.) %>% topTable(., coef=2, sort.by="none", number=Inf) -> fake2

lmFit(data.4[,304:311], model.matrix(~1+fake.meta3$SP+fake.meta3$Batch)) %>% eBayes(.) %>% topTable(., coef=2, sort.by="none", number=Inf) -> fake3

lmFit(data.4[,304:311], model.matrix(~1+fake.meta4$SP+fake.meta4$Batch)) %>% eBayes(.) %>% topTable(., coef=2, sort.by="none", number=Inf) -> fake4

newqqplot(-log10(fake1$P.Value), c(0.5, 0.75), "QQ Plot, Shuffled Design no SX, 1 | 4")

newqqplot(-log10(fake2$P.Value), c(0.5, 0.75), "QQ Plot, Shuffled Design no SX, 2 | 4")

newqqplot(-log10(fake3$P.Value), c(0.5, 0.75), "QQ Plot, Shuffled Design no SX, 3 | 4")

newqqplot(-log10(fake4$P.Value), c(0.5, 0.75), "QQ Plot, Shuffled Design no SX, 4 | 4")

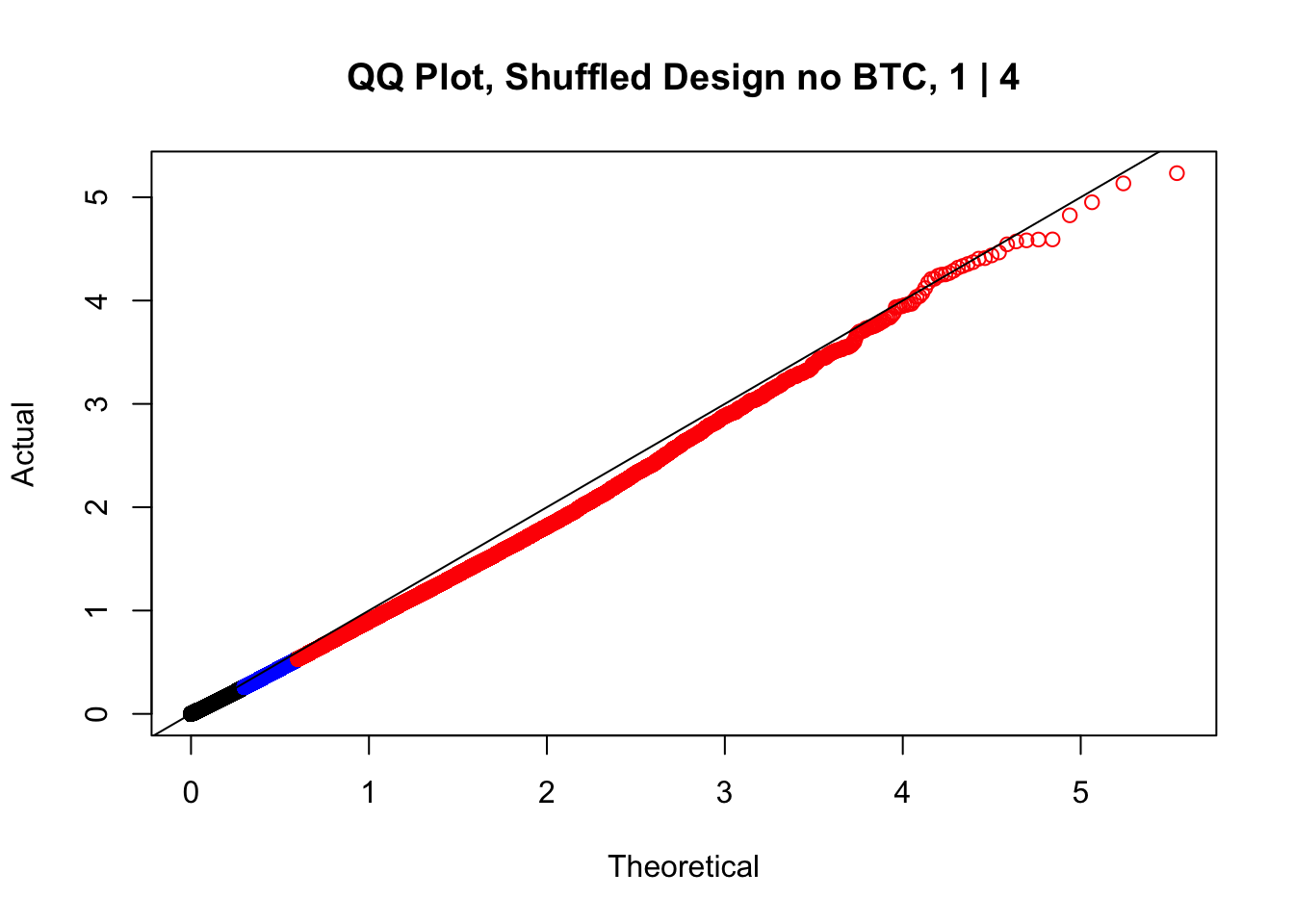

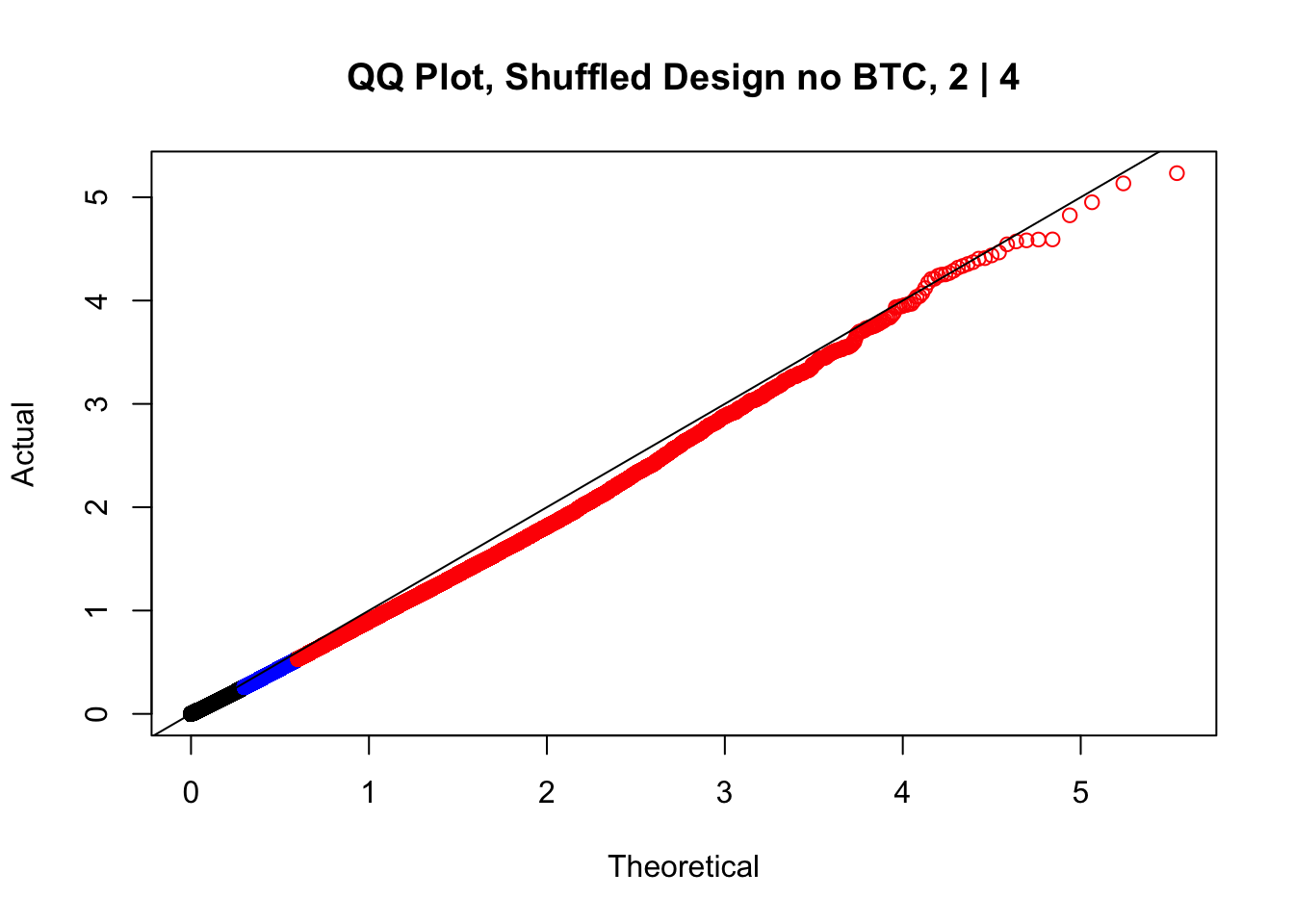

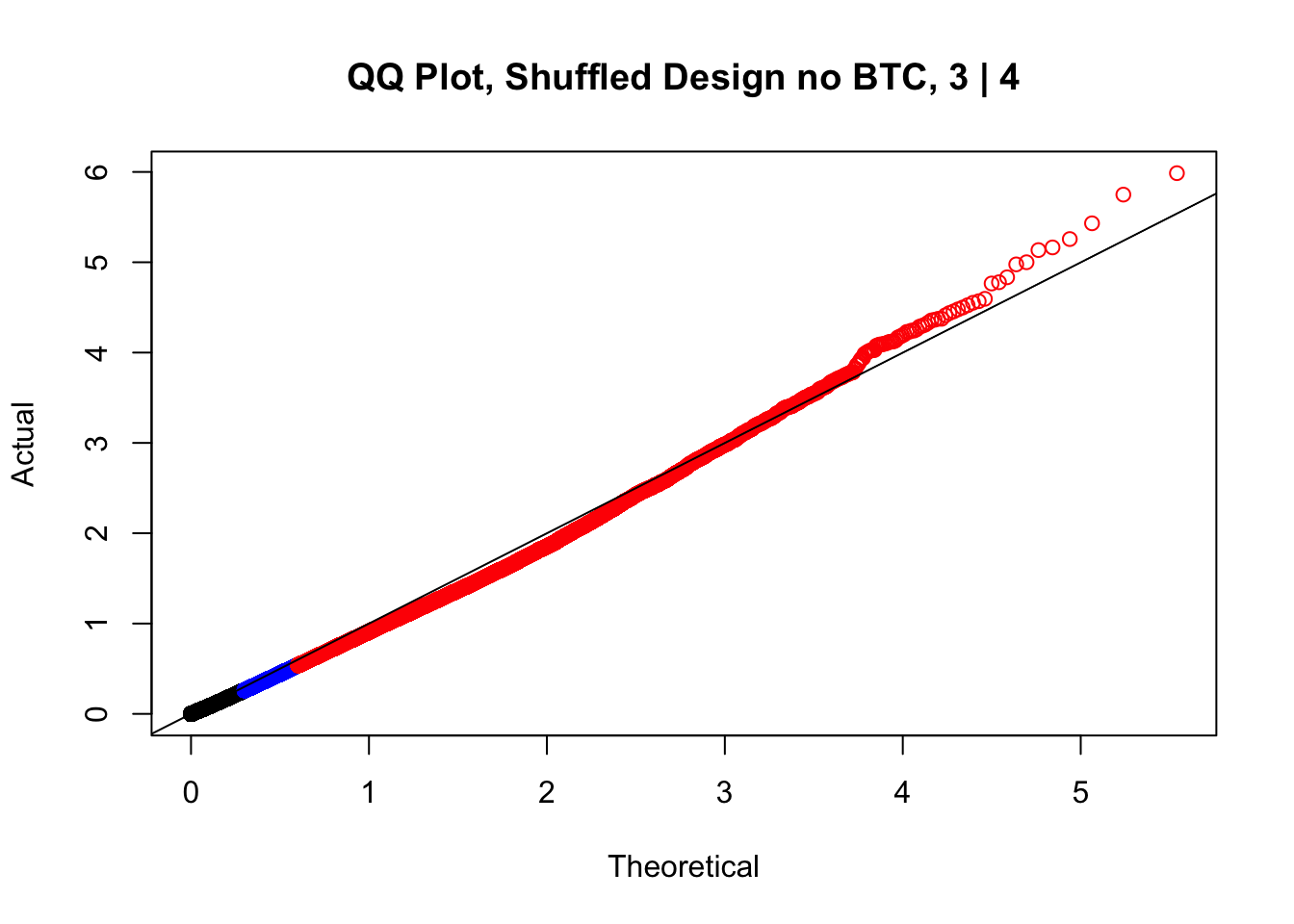

lmFit(data.4[,304:311], model.matrix(~1+fake.meta1$SP+fake.meta1$SX)) %>% eBayes(.) %>% topTable(., coef=2, sort.by="none", number=Inf) -> fake1

lmFit(data.4[,304:311], model.matrix(~1+fake.meta2$SP+fake.meta2$SX)) %>% eBayes(.) %>% topTable(., coef=2, sort.by="none", number=Inf) -> fake2

lmFit(data.4[,304:311], model.matrix(~1+fake.meta3$SP+fake.meta3$SX)) %>% eBayes(.) %>% topTable(., coef=2, sort.by="none", number=Inf) -> fake3Coefficients not estimable: fake.meta3$SXM Warning: Partial NA coefficients for 347206 probe(s)

Warning: Estimation of var.prior failed - set to default valuelmFit(data.4[,304:311], model.matrix(~1+fake.meta4$SP+fake.meta4$SX)) %>% eBayes(.) %>% topTable(., coef=2, sort.by="none", number=Inf) -> fake4

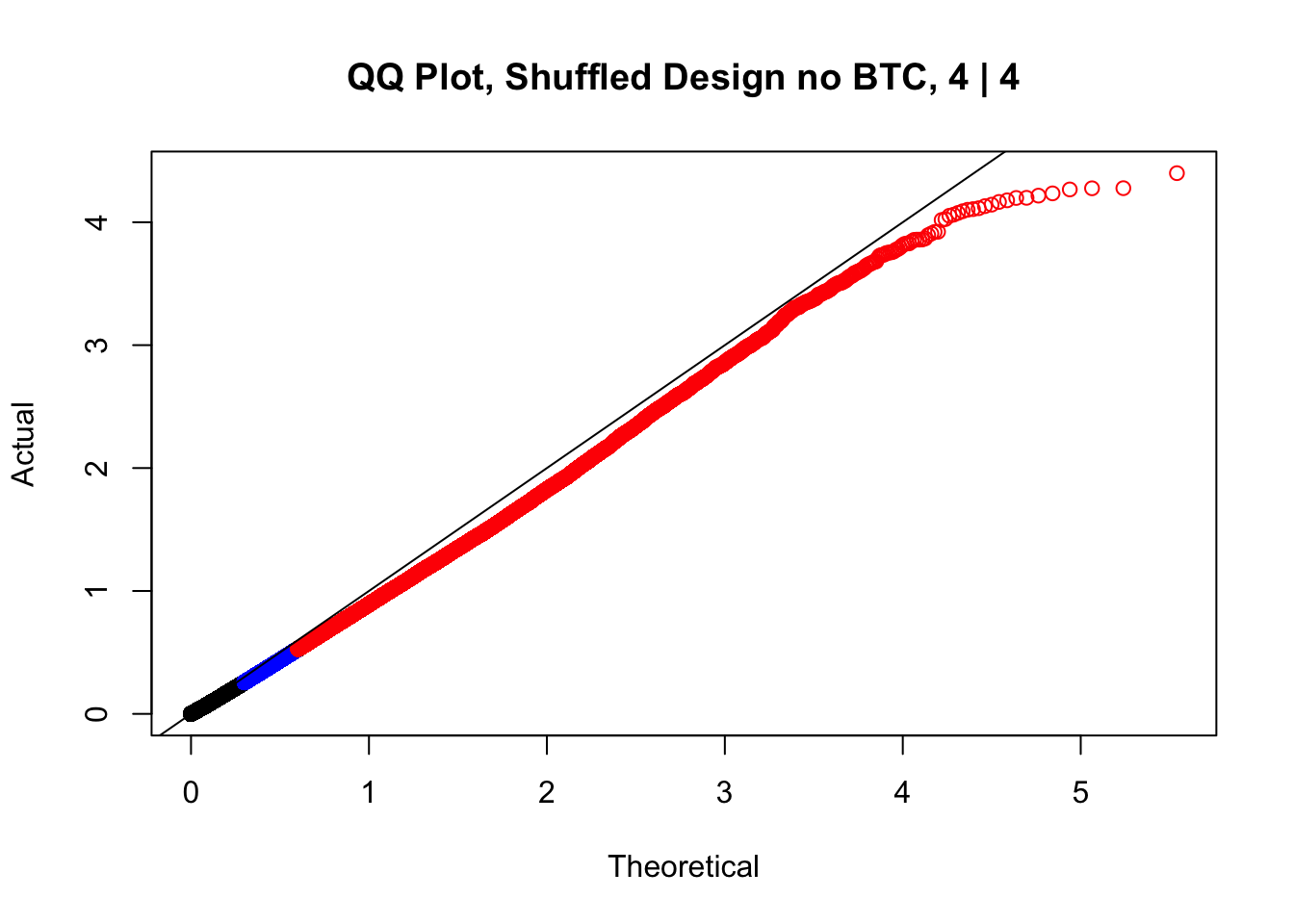

newqqplot(-log10(fake1$P.Value), c(0.5, 0.75), "QQ Plot, Shuffled Design no BTC, 1 | 4")

newqqplot(-log10(fake2$P.Value), c(0.5, 0.75), "QQ Plot, Shuffled Design no BTC, 2 | 4")

newqqplot(-log10(fake3$P.Value), c(0.5, 0.75), "QQ Plot, Shuffled Design no BTC, 3 | 4")

newqqplot(-log10(fake4$P.Value), c(0.5, 0.75), "QQ Plot, Shuffled Design no BTC, 4 | 4")

lmFit(data.4[,304:311], model.matrix(~1+fake.meta1$SP)) %>% eBayes(.) %>% topTable(., coef=2, sort.by="none", number=Inf) -> fake1

lmFit(data.4[,304:311], model.matrix(~1+fake.meta2$SP)) %>% eBayes(.) %>% topTable(., coef=2, sort.by="none", number=Inf) -> fake2

lmFit(data.4[,304:311], model.matrix(~1+fake.meta3$SP)) %>% eBayes(.) %>% topTable(., coef=2, sort.by="none", number=Inf) -> fake3

lmFit(data.4[,304:311], model.matrix(~1+fake.meta4$SP)) %>% eBayes(.) %>% topTable(., coef=2, sort.by="none", number=Inf) -> fake4

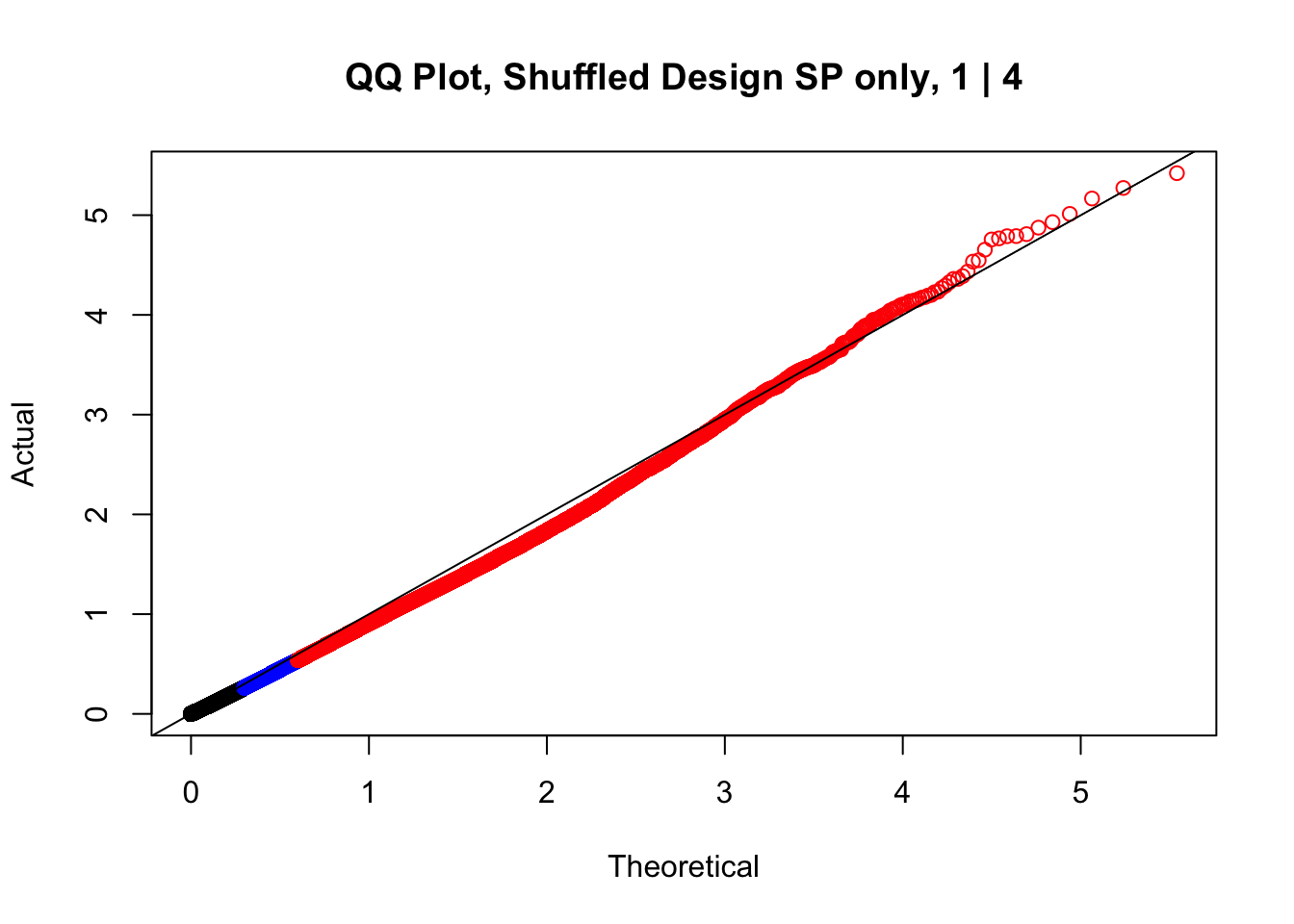

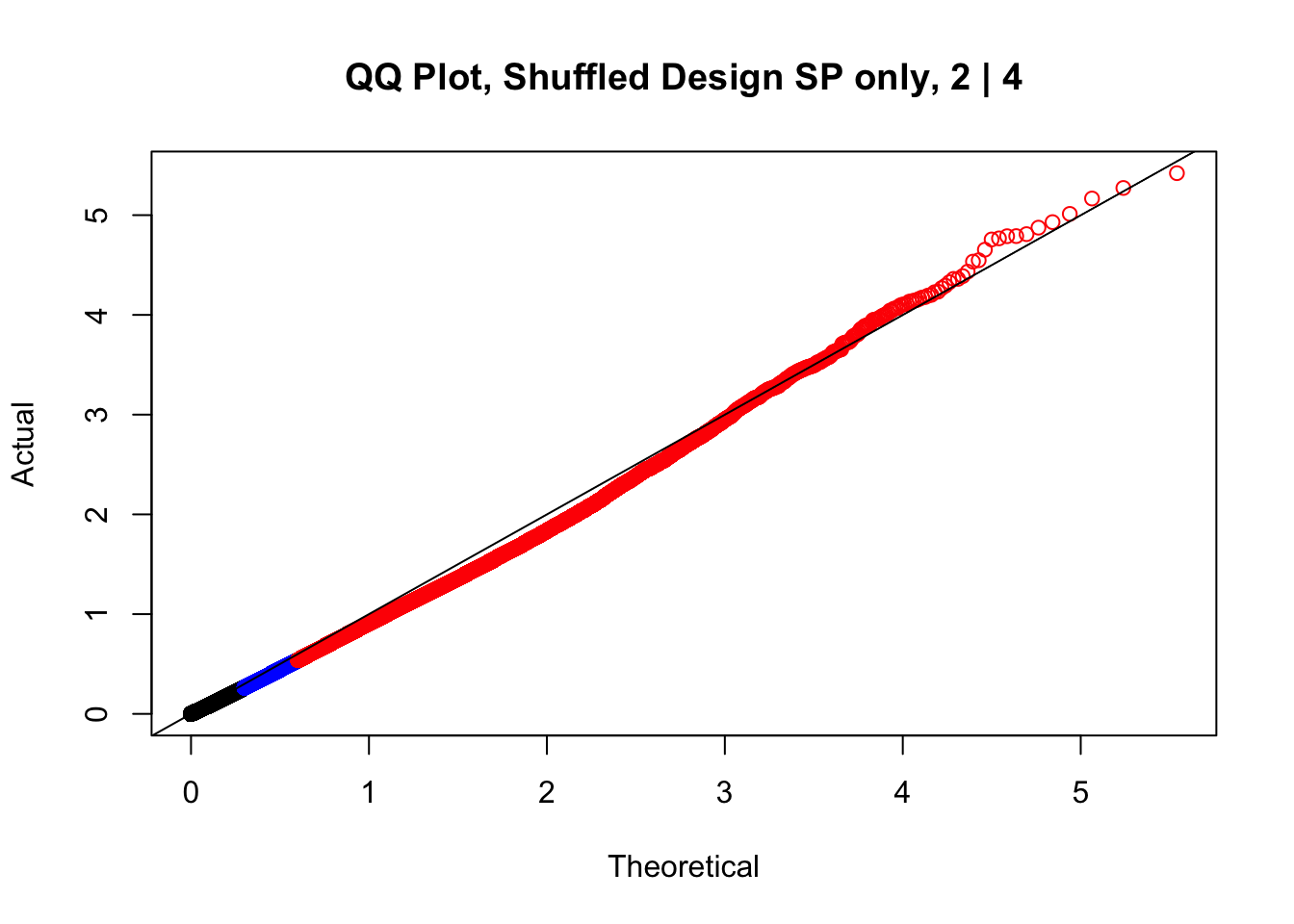

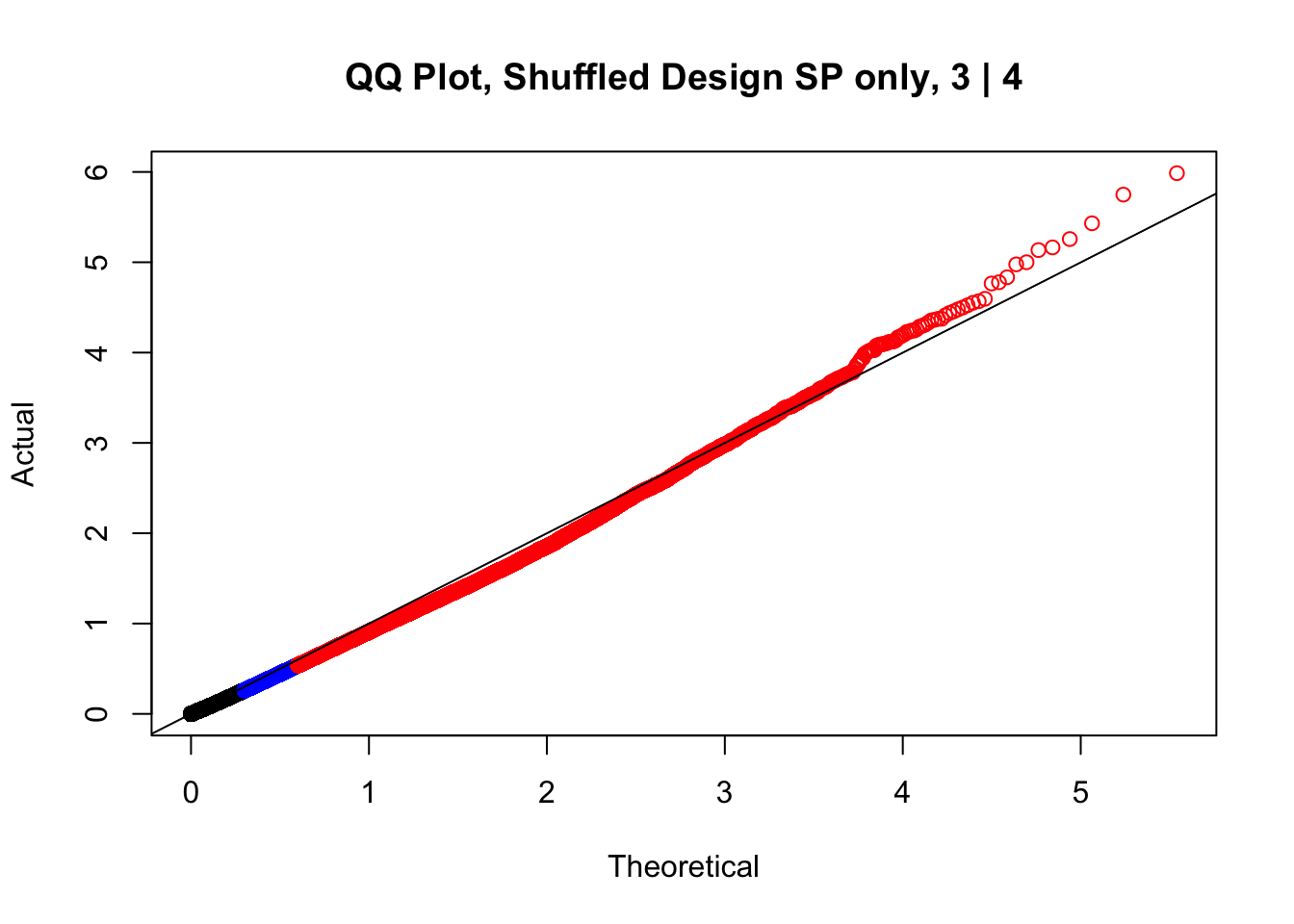

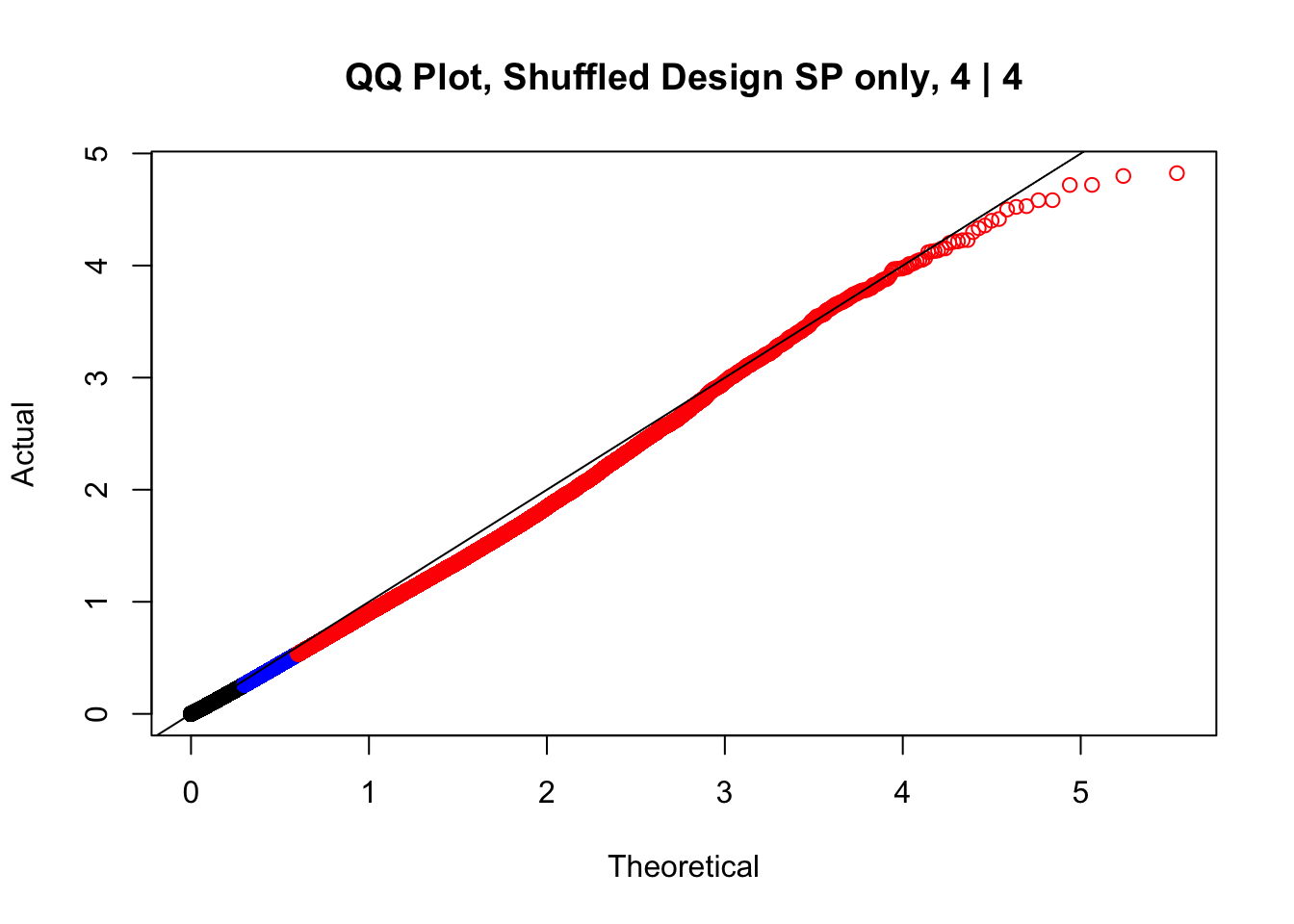

newqqplot(-log10(fake1$P.Value), c(0.5, 0.75), "QQ Plot, Shuffled Design SP only, 1 | 4")

newqqplot(-log10(fake2$P.Value), c(0.5, 0.75), "QQ Plot, Shuffled Design SP only, 2 | 4")

newqqplot(-log10(fake3$P.Value), c(0.5, 0.75), "QQ Plot, Shuffled Design SP only, 3 | 4")

newqqplot(-log10(fake4$P.Value), c(0.5, 0.75), "QQ Plot, Shuffled Design SP only, 4 | 4") These are slightly better, but many show some small degree of deflation. After looking at all these QQ plots concerns about p-value inflation are fairly assuaged, and it’s again worth noting that there was no real cause for concern in the first place. Once again, I pulled out significant Hi-C hits from each species, so it makes sense that this set of hits might already be enriched for strong species differences. I conclude that the signal seen in the QQ plot is not an artifact of making incorrect assumptions in linear modeling.

These are slightly better, but many show some small degree of deflation. After looking at all these QQ plots concerns about p-value inflation are fairly assuaged, and it’s again worth noting that there was no real cause for concern in the first place. Once again, I pulled out significant Hi-C hits from each species, so it makes sense that this set of hits might already be enriched for strong species differences. I conclude that the signal seen in the QQ plot is not an artifact of making incorrect assumptions in linear modeling.

Genomic Differences

However, we might still be concerned that some of the observed differences are not truly driven by biology, but due to differences in genome builds or other issues induced by liftOver. I now add several other metrics measuring differences in bin sizes and pair distances between humans and chimps, to help identify differences that may be primarily driven by mapping of orthology or different genome builds rather than true biology. I will then subset the QQ plots to particular classes of these hits and see if any pattern emerges. This utilizes the normal set of p-values from the original design conditioned upon discovery in 4 individuals. I also place hits into classes based on differences in bin size or distance between pair mates, and use a 3-dimensional plot to see if any class(es) of hits show strong inflation in their species p-values from linear modeling. This represents one of the final filtering steps.

#To do this, I first have to pull out the mean start and end positions of bins for each of the species from the data:

H1startCmean <- rowMeans(data.4[,c('Hstart1-C', 'Hstart1-D', 'Hstart1-G', 'Hstart1-H')], na.rm=TRUE)

H1endCmean <- rowMeans(data.4[,c('Hend1-C', 'Hend1-D', 'Hend1-G', 'Hend1-H')], na.rm=TRUE)

H2startCmean <- rowMeans(data.4[,c('Hstart2-C', 'Hstart2-D', 'Hstart2-G', 'Hstart2-H')], na.rm=TRUE)

H2endCmean <- rowMeans(data.4[,c('Hend2-C', 'Hend2-D', 'Hend2-G', 'Hend2-H')], na.rm=TRUE)

C1startHmean <- rowMeans(data.4[,c('Cstart1-A', 'Cstart1-B', 'Cstart1-E', 'Cstart1-F')], na.rm=TRUE) #this is identical and easier.

C1endHmean <- rowMeans(data.4[,c('Cend1-A', 'Cend1-B', 'Cend1-E', 'Cend1-F')], na.rm=TRUE)

C2startHmean <- rowMeans(data.4[,c('Cstart2-A', 'Cstart2-B', 'Cstart2-E', 'Cstart2-F')], na.rm=TRUE)

C2endHmean <- rowMeans(data.4[,c('Cend2-A', 'Cend2-B', 'Cend2-E', 'Cend2-F')], na.rm=TRUE)

#Now, I use these data to add columns to the data frame for the sizes of bins and distance between bins. Note that I am only really looking at differences in the size of the "orthologous" bins called through liftover, as compared to their original size (10kb). Bin size differences in the values at the start of each individual's portion of the data frame for coordinates matching that species are merely due to size differences in reciprocal best hits liftover. The true size of bins within their own species is always 10kb. So here bin sizes being appended to the data frame are for lifted-over bins. I do the distance differences based on the HC-pair values (H1/H2 and C1/C2) that have been rounded to the nearest 10kb from the original values given by homer; since this should be balanced across species it shouldn't matter much. Worst case it would make the estimate off by 20kb, maximum.

H1sizeC <- H1endCmean-H1startCmean

H2sizeC <- H2endCmean-H2startCmean

data.4$H1diff <- abs(10000-H1sizeC)

data.4$H2diff <- abs(10000-H2sizeC)

C1sizeH <- C1endHmean-C1startHmean

C2sizeH <- C2endHmean-C2startHmean

data.4$C1diff <- abs(10000-C1sizeH)

data.4$C2diff <- abs(10000-C2sizeH)

data.4$Hdist <- abs(as.numeric(sub(".*-", "", data.4$H2))-as.numeric(sub(".*-", "", data.4$H1)))

data.4$Cdist <- abs(as.numeric(sub(".*-", "", data.4$C2))-as.numeric(sub(".*-", "", data.4$C1)))

data.4$dist_diff <- abs(data.4$Hdist-data.4$Cdist)

#Now I look at the distributions of some of these metrics to inform me about how best to bin the data for filtering and further QC checking.

quantile(data.4$H1diff, na.rm=TRUE) 0% 25% 50% 75% 100%

0 9 21 51 1094 quantile(data.4$H2diff, na.rm=TRUE) 0% 25% 50% 75% 100%

0 8 21 51 1101 quantile(data.4$C1diff, na.rm=TRUE) 0% 25% 50% 75% 100%

0 9 21 50 1096 quantile(data.4$C2diff, na.rm=TRUE) 0% 25% 50% 75% 100%

0 8 21 50 1098 quantile(data.4$dist_diff, na.rm=TRUE) 0% 25% 50% 75% 100%

0 0 0 20000 77730000 #From this I can see that the majority of bin size differences are relatively small (<25 bp), and the majority of hits do not have a difference in distance between the mates in a pair across the species (all the way up to 50th percentile the distance difference is still 0). Now I'll take a look at some QQ plots filtering along these values to get an idea of if any of these technical orthology-calling artifacts are driving the inflation we see.

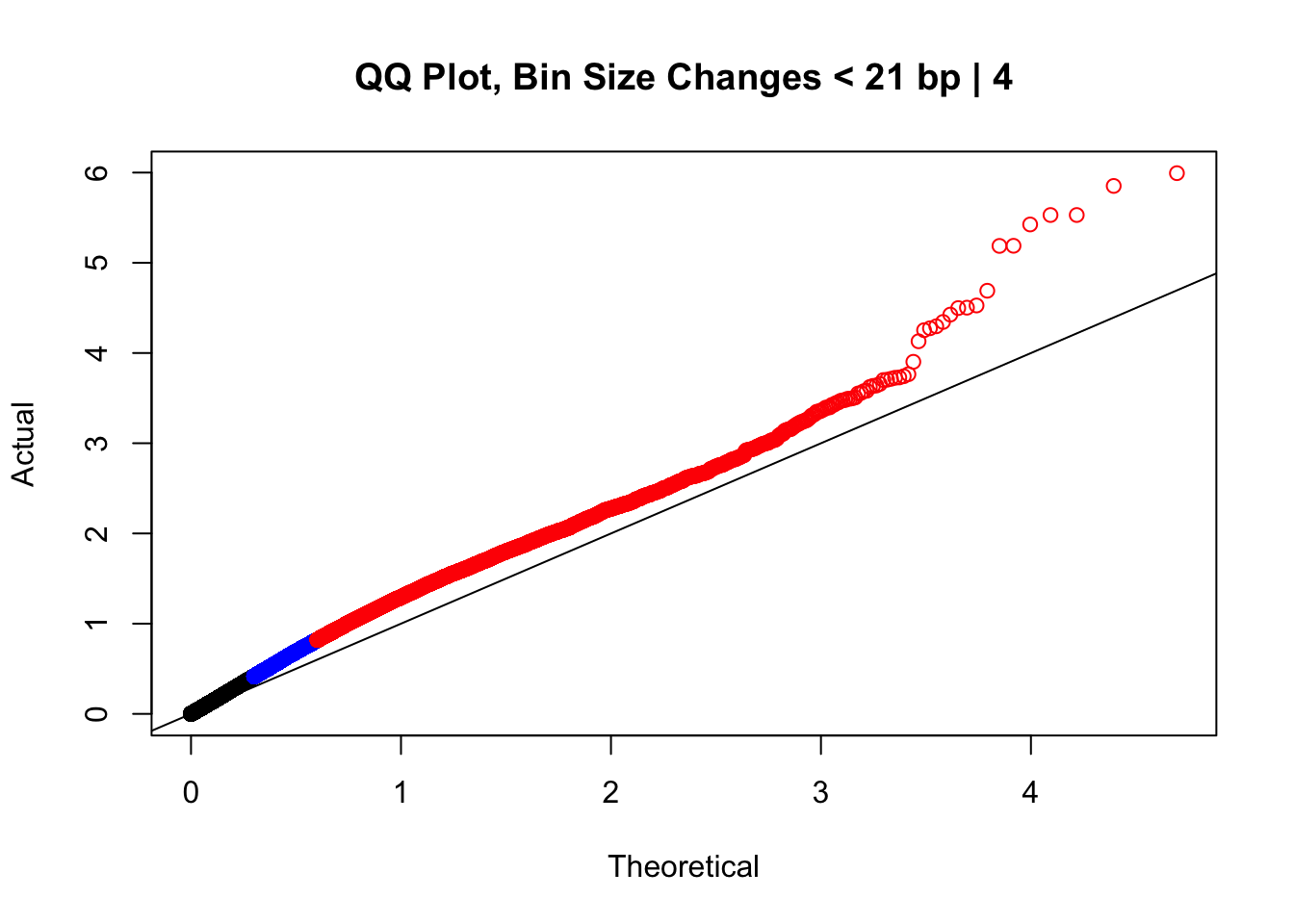

newqqplot(-log10(filter(data.4, H1diff<21&H2diff<21&C1diff<21&C2diff<21)$sp_pval), c(0.5, 0.75), "QQ Plot, Bin Size Changes < 21 bp | 4") #We can see that the QQplot looks a bit better if we only utilize hits where the bin size difference is less than the 50% percentile of their distribution.

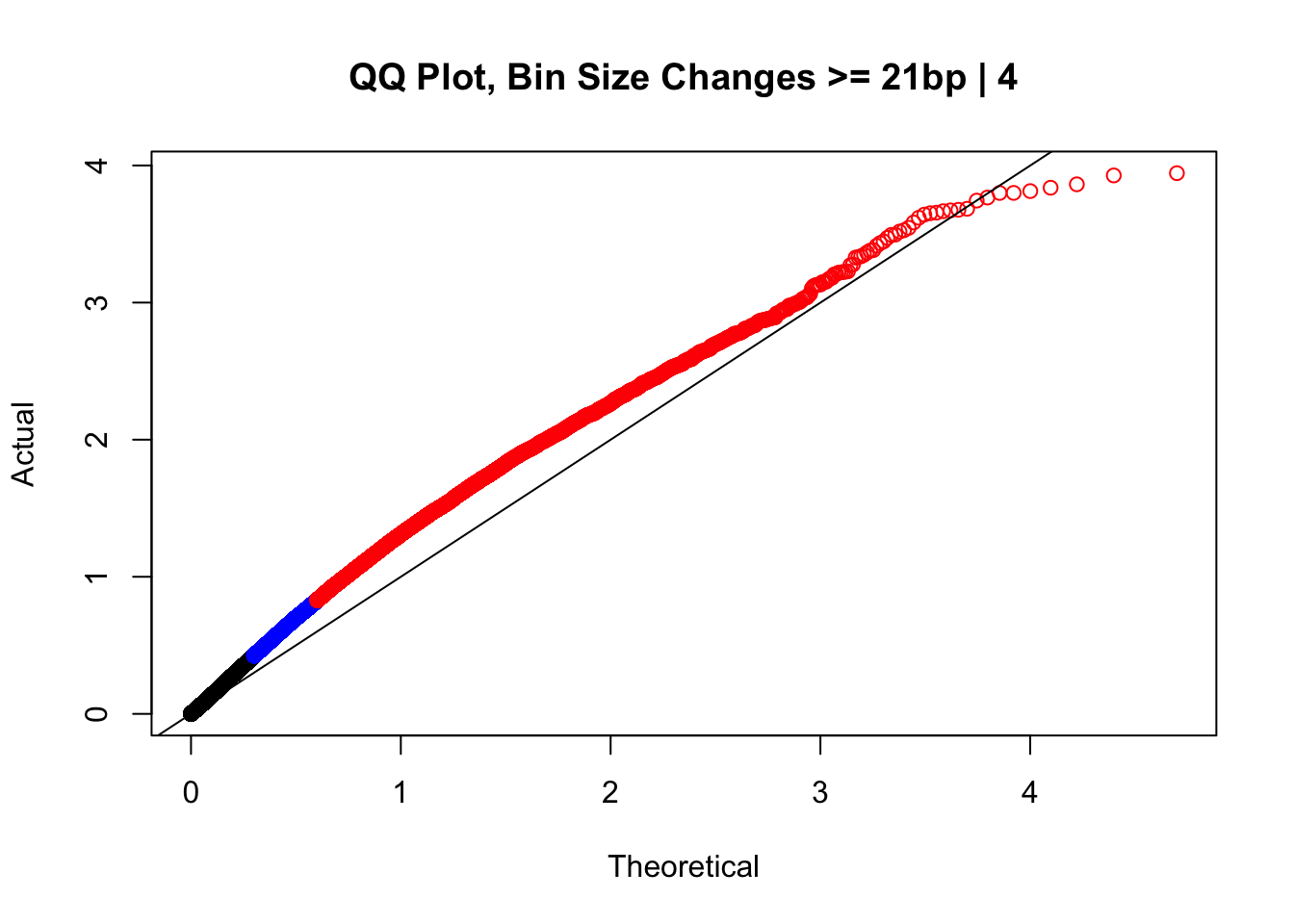

newqqplot(-log10(filter(data.4, H1diff>=21&H2diff>=21&C1diff>=21&C2diff>=21)$sp_pval), c(0.5, 0.75), "QQ Plot, Bin Size Changes >= 21bp | 4") #This doesn't look much different--what about the case where there are large differences in the size?

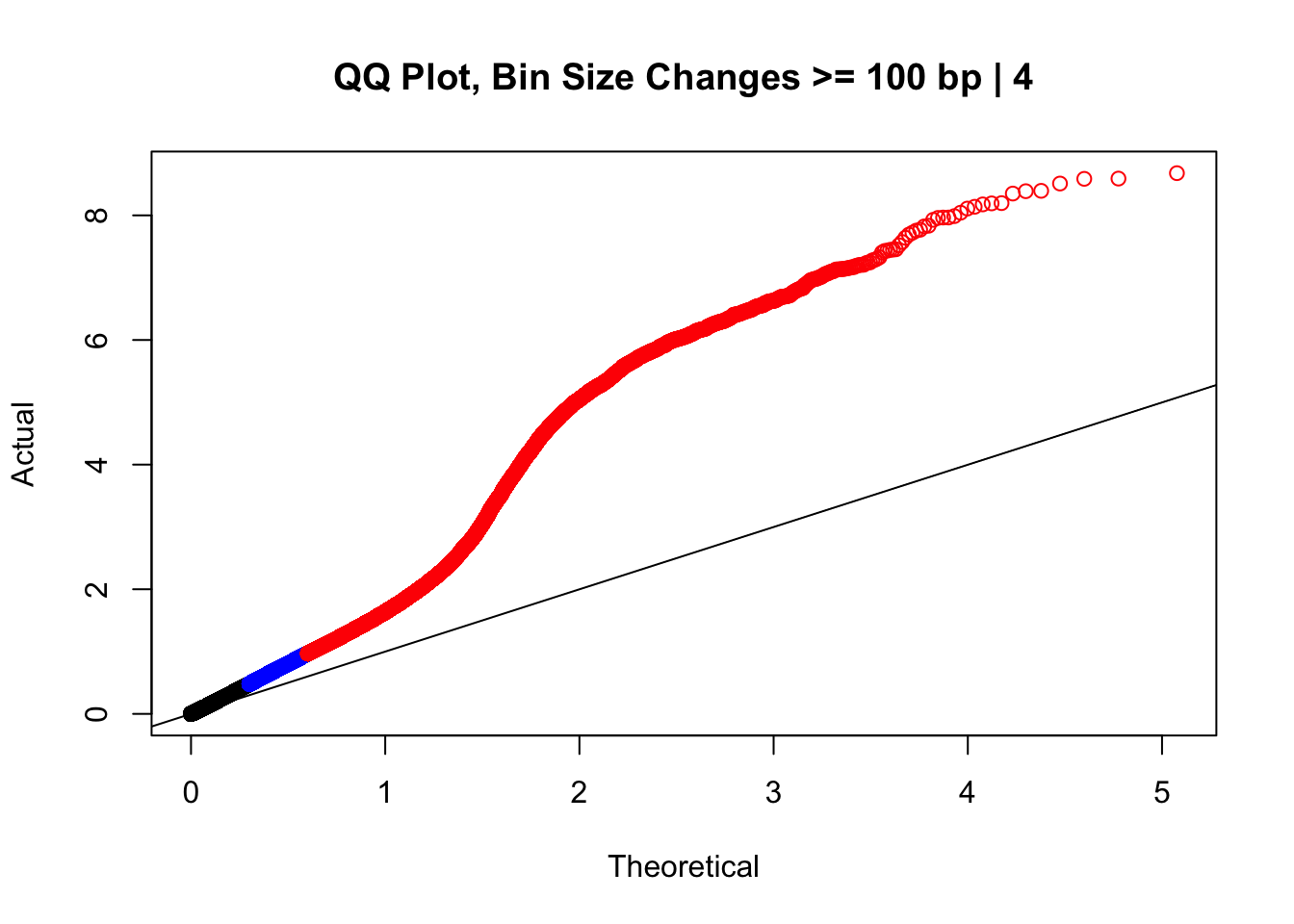

newqqplot(-log10(filter(data.4, H1diff>=100|H2diff>=100|C1diff>=100|C2diff>=100)$sp_pval), c(0.5, 0.75), "QQ Plot, Bin Size Changes >= 100 bp | 4") #It's hard to know what to make of this since here I am just allowing for any bin to have a size change greater than 100 bp. It looks fairly similar to the QQplot of the full data.

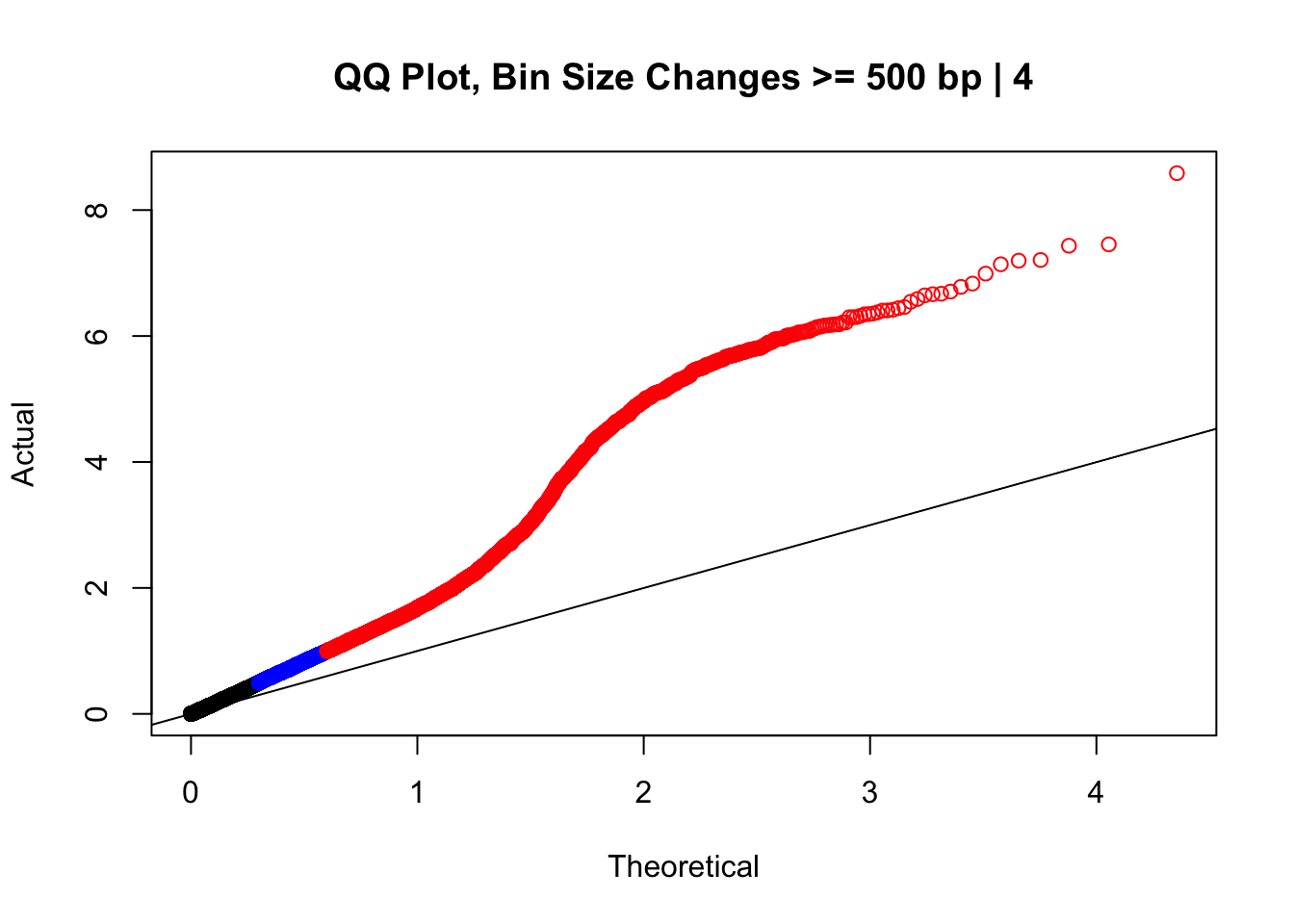

newqqplot(-log10(filter(data.4, H1diff>=500|H2diff>=500|C1diff>=500|C2diff>=500)$sp_pval), c(0.5, 0.75), "QQ Plot, Bin Size Changes >= 500 bp | 4") #It's hard to know what to make of this since here I am just allowing for any bin to have a size change greater than 500 bp.

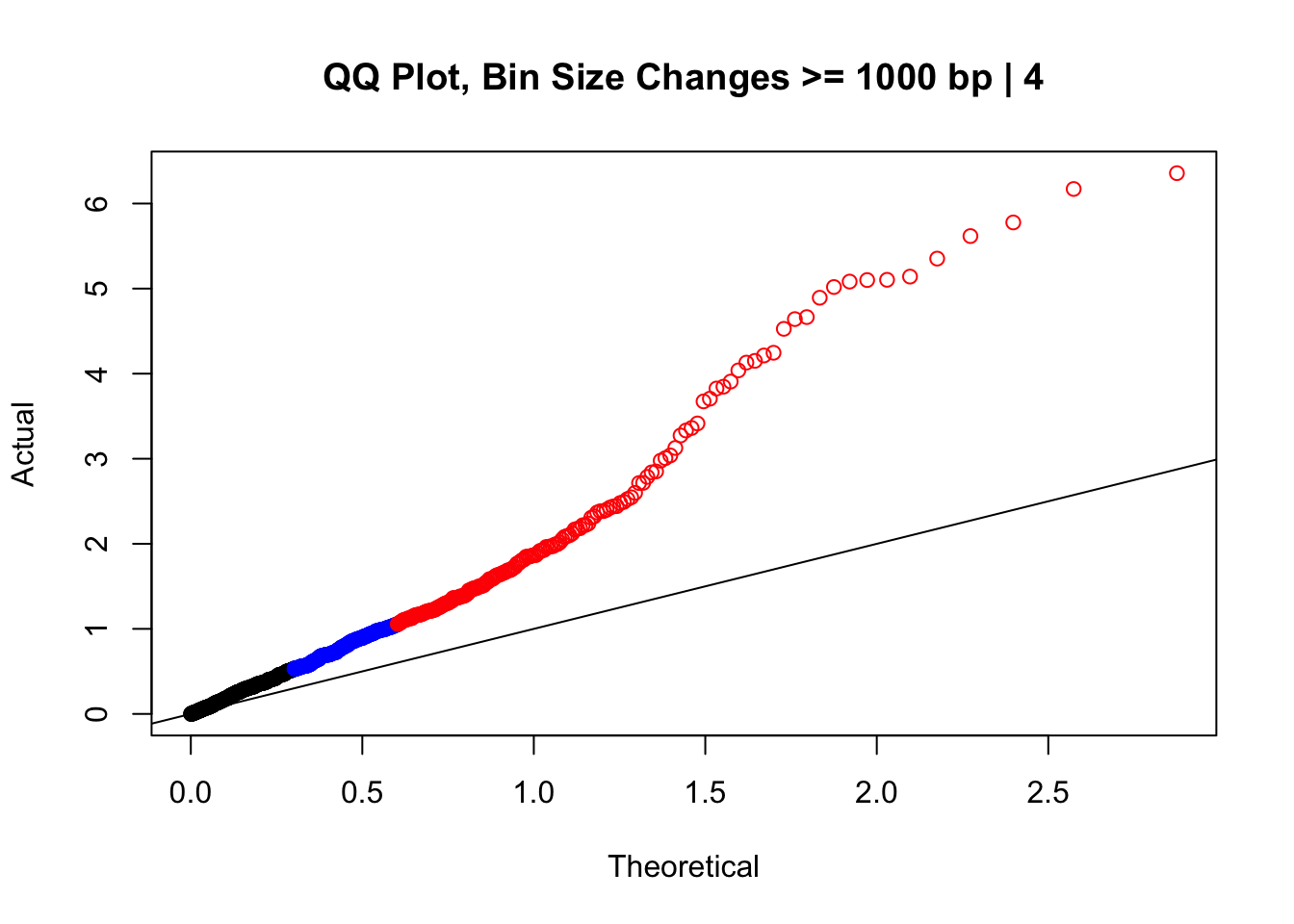

newqqplot(-log10(filter(data.4, H1diff>=1000|H2diff>=1000|C1diff>=1000|C2diff>=1000)$sp_pval), c(0.5, 0.75), "QQ Plot, Bin Size Changes >= 1000 bp | 4") #It's hard to know what to make of this since here I am just allowing for any bin to have a size change greater than 1kb.

#It may be hard to say anything super definitive about the bin size changes from these plots, but the fact that they all still show inflation, and I don't see any stark difference between bin size changes exceeding 100 bp, suggests to me that bin size is having a minimal effect here. Still, we will include it when looking at filtering criteria below for figuring out what hits might need to be removed still.

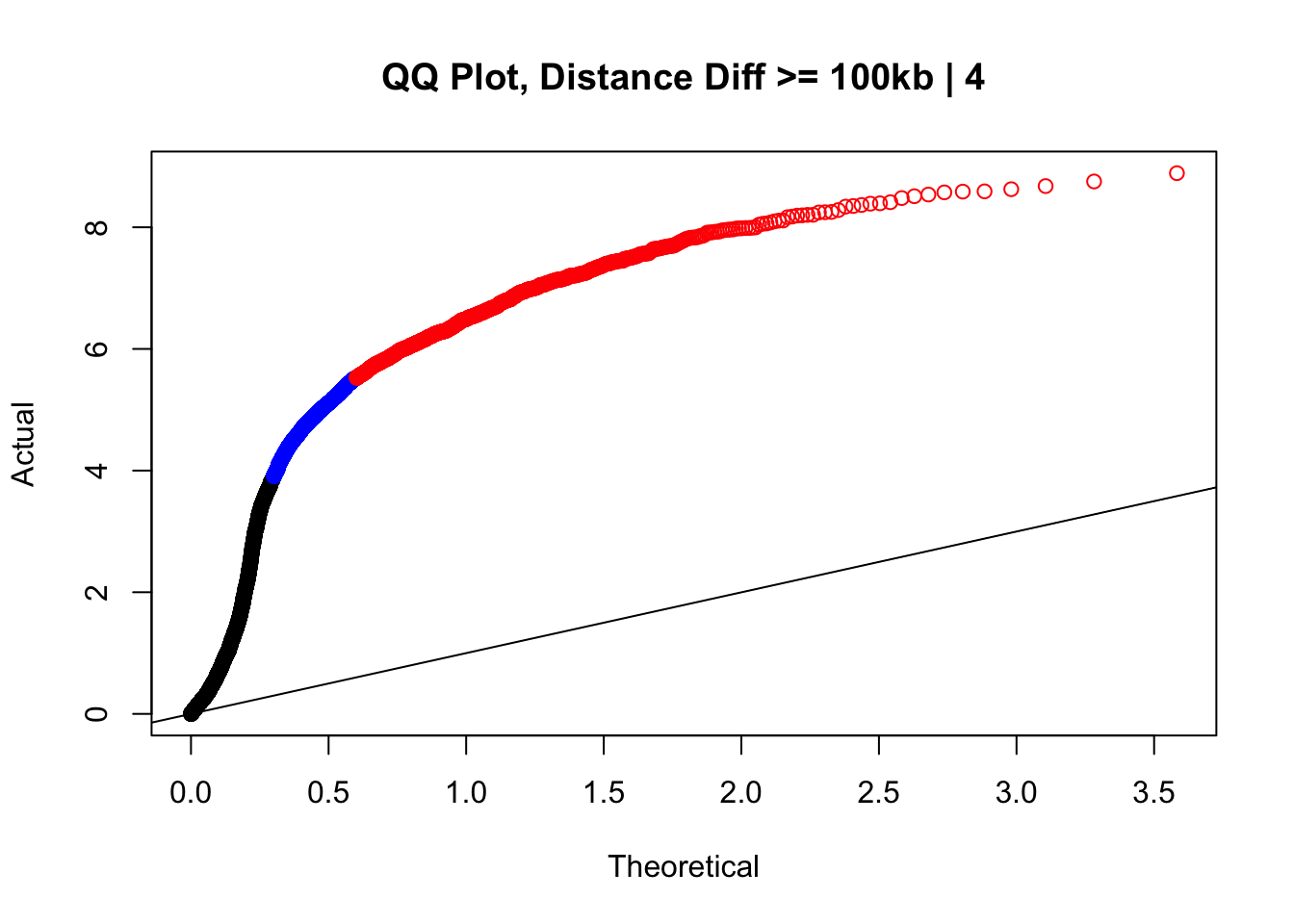

newqqplot(-log10(filter(data.4, dist_diff>=100000)$sp_pval), c(0.5, 0.75), "QQ Plot, Distance Diff >= 100kb | 4") #This is good to know--see EXTREMELY strong inflation amongst this class of p-values, perhaps giving another criteria for filtering

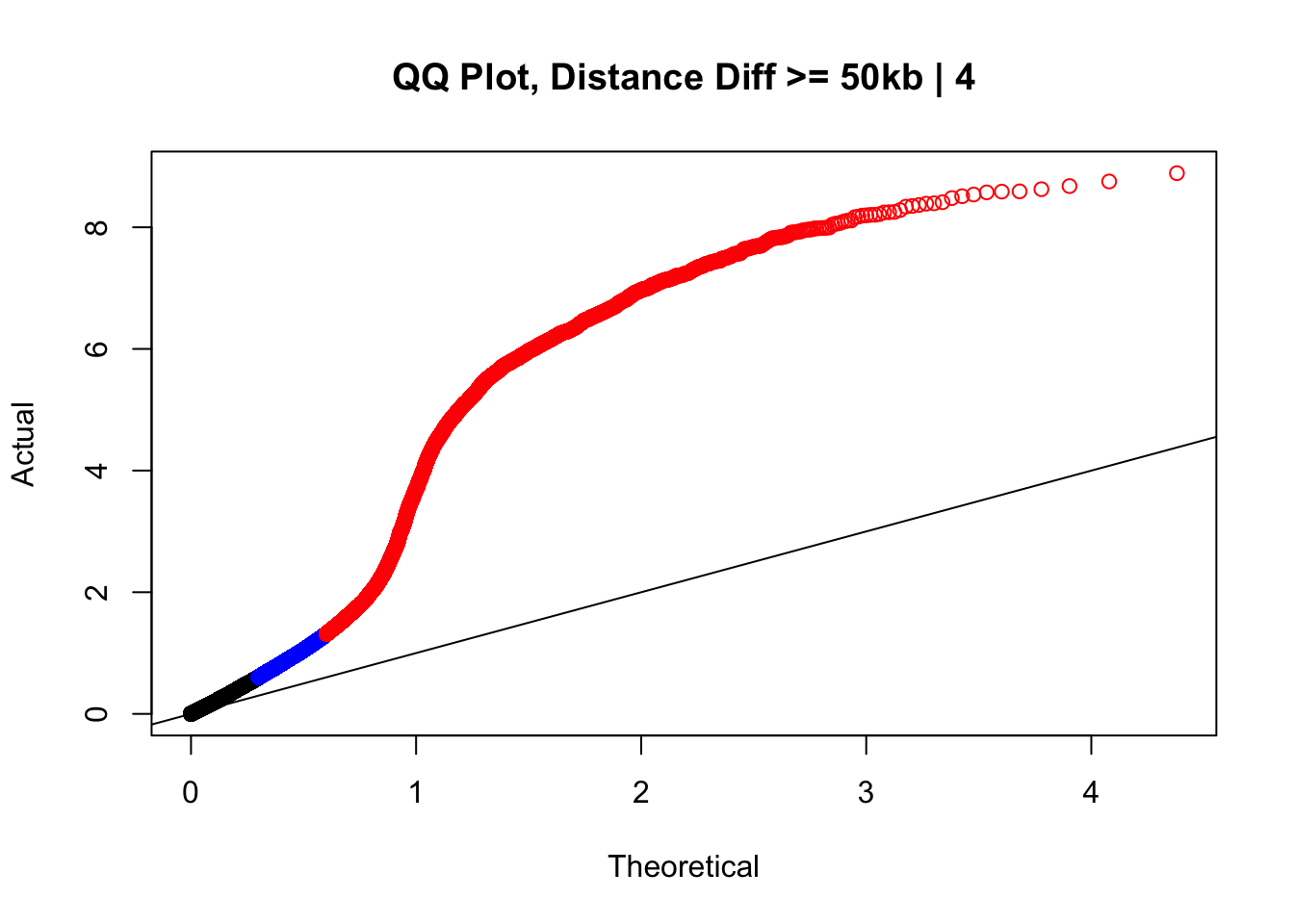

newqqplot(-log10(filter(data.4, dist_diff>=50000)$sp_pval), c(0.5, 0.75), "QQ Plot, Distance Diff >= 50kb | 4") #This is good to know--see that the inflation is a little weaker if we move towards including more pairs that have smaller distance differences between the species. What about pairs where there is no difference?

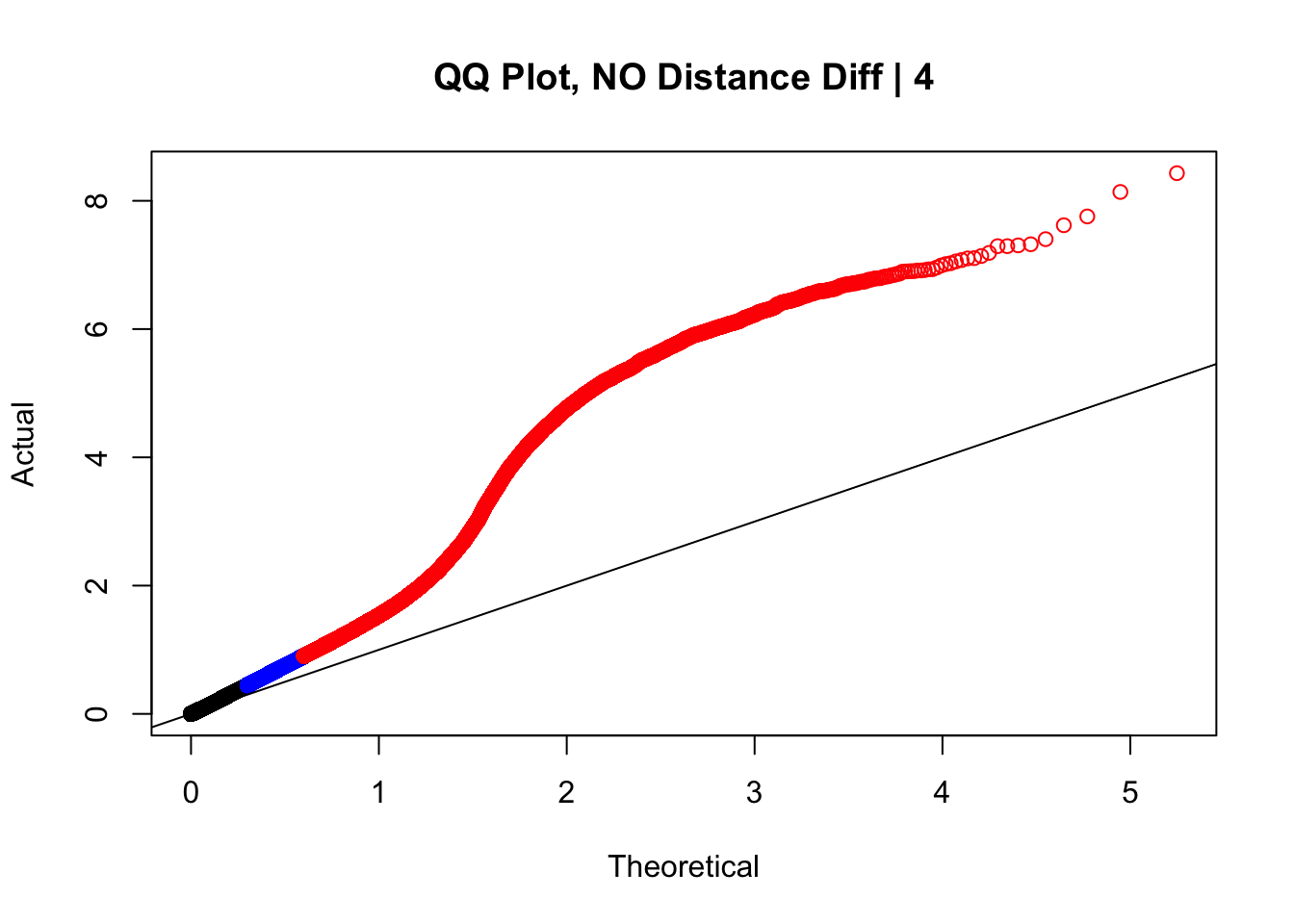

newqqplot(-log10(filter(data.4, dist_diff==0)$sp_pval), c(0.5, 0.75), "QQ Plot, NO Distance Diff | 4") #Values are still inflated, but the first solid 50% of the distribution stays along the normal line--this is great!

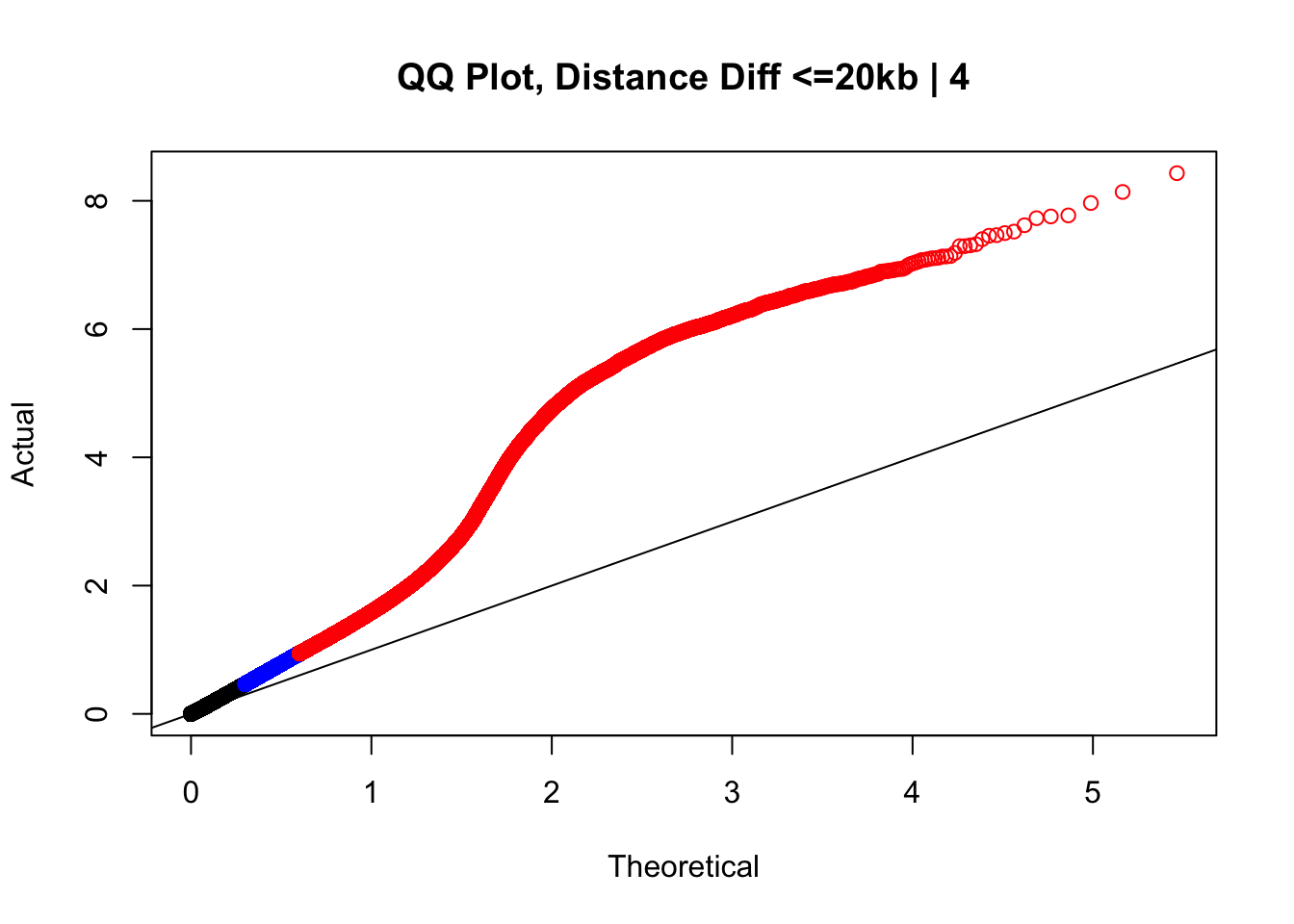

newqqplot(-log10(filter(data.4, dist_diff<=20000)$sp_pval), c(0.5, 0.75), "QQ Plot, Distance Diff <=20kb | 4") #And now we can see the trend in the opposite direction--SLIGHT inflation of the p-values when including more hits that have a larger distance difference.

#Based on the quantiles from before, the size differences have relatively little variation in their distribution. Hence I will take them and summarize them as a single value here:

sizediffs <- rowMeans(select(data.4, H1diff, H2diff, C1diff, C2diff), na.rm=TRUE)

quantile(sizediffs, probs=seq(0, 1, 0.2)) 0% 20% 40% 60% 80% 100%

0.00 13.50 23.25 41.50 108.50 970.50 #Now what I would like to do is find classes of hits--combinations between size and distance differences--that comprise roughly 10% of the data, or ~30k hits here. I will look at these classes in a 3-dimensional plot that includes size and distance differences as two of the axes, and the FDR from linear modeling on the 3rd. What I am in search of are classes of hits that show inflated FDR.

plot3d <- data.frame(bin_min=pmin(data.4$H1diff, data.4$H2diff, data.4$C1diff, data.4$C2diff, na.rm=TRUE), bin_max=pmax(data.4$H1diff, data.4$H2diff, data.4$C1diff, data.4$C2diff, na.rm=TRUE), sizediffs=sizediffs, dist_diff=data.4$dist_diff, FDR=data.4$sp_BH_pval)

#Of course, I already inherently know I will have a difficult time finding sets large enough with the bigger differences in distance, as only 10k or so of the hits even have a distance difference greater than 50kb. About half the hits have no distance difference, and of the remaining half, about half have size differences of 1-2 bins (10-20 kb), and half have greater size differences.

#First, I go in search of sets that will be large enough.

nrow(filter(plot3d, sizediffs<=13.5&dist_diff<10000)) #35k, a class of minimal changes--no distance difference, and minimal size difference.[1] 35260nrow(filter(plot3d, sizediffs<=23.33&sizediffs>13.5&dist_diff<10000)) #35k, still minimal changes[1] 35426nrow(filter(plot3d, sizediffs<=42.18750&sizediffs>23.33&dist_diff<10000)) #35k, minimal changes still, bin size getting up there[1] 35933nrow(filter(plot3d, sizediffs<=109.5&sizediffs>42.18750&dist_diff<10000)) #35k, minimal changes still, bin size even higher though[1] 35105nrow(filter(plot3d, sizediffs>109.5&dist_diff<10000)) #And there's another 35k! With big bin size changes.[1] 35283nrow(filter(plot3d, sizediffs<=13.5&dist_diff>=10000&dist_diff<=20000)) #23k, a class of minimal changes--no size difference, and minimal distance difference (1-2 bins off).[1] 22902nrow(filter(plot3d, sizediffs<=23.33&sizediffs>13.5&dist_diff>=10000&dist_diff<=20000)) #23k, small size difference, and minimal distance difference (1-2 bins off, 10-20 kb).[1] 23367nrow(filter(plot3d, sizediffs<=42.18750&sizediffs>23.33&dist_diff>=10000&dist_diff<=20000)) #23k, minimal changes still, bin size getting up there, and minimal distance difference (1-2 bins off, 10-20 kb).[1] 23082nrow(filter(plot3d, sizediffs<=109.5&sizediffs>42.18750&dist_diff>=10000&dist_diff<=20000)) #23k, minimal changes still, bin size even higher though, and minimal distance difference (1-2 bins off, 10-20 kb).[1] 22788nrow(filter(plot3d, sizediffs>109.5&dist_diff>=10000&dist_diff<=20000)) #And there's another 23k! With big bin size changes, and minimal distance difference (1-2 bins off, 10-20 kb).[1] 22924nrow(filter(plot3d, sizediffs<=13.5&dist_diff>20000)) #11k, a class of large changes--big distance difference, and minimal size difference.[1] 11638nrow(filter(plot3d, sizediffs<=23.33&sizediffs>13.5&dist_diff>20000)) #11k, a class of large changes--big distance difference, and relatively small size difference.[1] 11113nrow(filter(plot3d, sizediffs<=42.18750&sizediffs>23.33&dist_diff>20000)) #11k, a class of large changes--big distance difference, and median size difference.[1] 10793nrow(filter(plot3d, sizediffs<=109.5&sizediffs>42.18750&dist_diff>20000)) #11k, a class of large changes--big distance difference, and fairly large size difference.[1] 10949nrow(filter(plot3d, sizediffs>109.5&dist_diff>20000)) #11k, a class of the largest changes--big distance difference, and big size difference.[1] 10643#Now, create a data frame with these classes, their sizes, and the number of hits in each of the classes that is at FDR <= 0.05.

true3d <- data.frame(dist_class=c(rep("none", 5), rep("short", 5), rep("long", 5)), bin_quant=rep(1:5, 3), set_size=c(nrow(filter(plot3d, sizediffs<=13.5&dist_diff<10000)), nrow(filter(plot3d, sizediffs<=23.33&sizediffs>13.5&dist_diff<10000)), nrow(filter(plot3d, sizediffs<=42.18750&sizediffs>23.33&dist_diff<10000)), nrow(filter(plot3d, sizediffs<=109.5&sizediffs>42.18750&dist_diff<10000)), nrow(filter(plot3d, sizediffs>109.5&dist_diff<10000)), nrow(filter(plot3d, sizediffs<=13.5&dist_diff>=10000&dist_diff<=20000)), nrow(filter(plot3d, sizediffs<=23.33&sizediffs>13.5&dist_diff>=10000&dist_diff<=20000)), nrow(filter(plot3d, sizediffs<=42.18750&sizediffs>23.33&dist_diff>=10000&dist_diff<=20000)), nrow(filter(plot3d, sizediffs<=109.5&sizediffs>42.18750&dist_diff>=10000&dist_diff<=20000)), nrow(filter(plot3d, sizediffs>109.5&dist_diff>=10000&dist_diff<=20000)), nrow(filter(plot3d, sizediffs<=13.5&dist_diff>20000)), nrow(filter(plot3d, sizediffs<=23.33&sizediffs>13.5&dist_diff>20000)), nrow(filter(plot3d, sizediffs<=42.18750&sizediffs>23.33&dist_diff>20000)), nrow(filter(plot3d, sizediffs<=109.5&sizediffs>42.18750&dist_diff>20000)), nrow(filter(plot3d, sizediffs>109.5&dist_diff>20000))), num_sig=c(nrow(filter(plot3d, sizediffs<=13.5&dist_diff<10000&FDR<=0.05)), nrow(filter(plot3d, sizediffs<=23.33&sizediffs>13.5&dist_diff<10000&FDR<=0.05)), nrow(filter(plot3d, sizediffs<=42.18750&sizediffs>23.33&dist_diff<10000&FDR<=0.05)), nrow(filter(plot3d, sizediffs<=109.5&sizediffs>42.18750&dist_diff<10000&FDR<=0.05)), nrow(filter(plot3d, sizediffs>109.5&dist_diff<10000&FDR<=0.05)), nrow(filter(plot3d, sizediffs<=13.5&dist_diff>=10000&dist_diff<=20000&FDR<=0.05)), nrow(filter(plot3d, sizediffs<=23.33&sizediffs>13.5&dist_diff>=10000&dist_diff<=20000&FDR<=0.05)), nrow(filter(plot3d, sizediffs<=42.18750&sizediffs>23.33&dist_diff>=10000&dist_diff<=20000&FDR<=0.05)), nrow(filter(plot3d, sizediffs<=109.5&sizediffs>42.18750&dist_diff>=10000&dist_diff<=20000&FDR<=0.05)), nrow(filter(plot3d, sizediffs>109.5&dist_diff>=10000&dist_diff<=20000&FDR<=0.05)), nrow(filter(plot3d, sizediffs<=13.5&dist_diff>20000&FDR<=0.05)), nrow(filter(plot3d, sizediffs<=23.33&sizediffs>13.5&dist_diff>20000&FDR<=0.05)), nrow(filter(plot3d, sizediffs<=42.18750&sizediffs>23.33&dist_diff>20000&FDR<=0.05)), nrow(filter(plot3d, sizediffs<=109.5&sizediffs>42.18750&dist_diff>20000&FDR<=0.05)), nrow(filter(plot3d, sizediffs>109.5&dist_diff>20000&FDR<=0.05))))

true3d$prop <- true3d$num_sig/true3d$set_size #Get the proportion of these different sets that are significant (at or below 5% FDR)

#I will then take this data frame and export it to plot.ly's online interface, in order to make a 3D plot that will allow me to visualize FDR significance inflation in any of these sets (and subsequently filter them out). The 3D plot looks like this:

htmltools::includeHTML("/Users/ittaieres/Hi-C/analysis/QQQC.html")#Based on the 3D QQ quality control plot, the hit classes with larger distance-between-mates differences should be filtered out due to inflation of # hits significant @ 5% FDR. This gets rid of ~55k hits, or about a seventh of them. Essentially I am removing any hits that showed a difference in distance of greater than 20kb when lifting Over acros the species, to eliminate technical genomic differences that may drive signal in the species term:

highclass <- which(plot3d$dist_diff>20000)

data.filtered <- data.4[-highclass,] #Just removed high class since it has the starkest effect on proportion of hits significant @ 5% FDRNow I have obliterated any remaining concerns about the QQ plot inflation, and filtered out another class of Hi-C significant hits where species differences may have been driven by issues with liftOver between genomes.

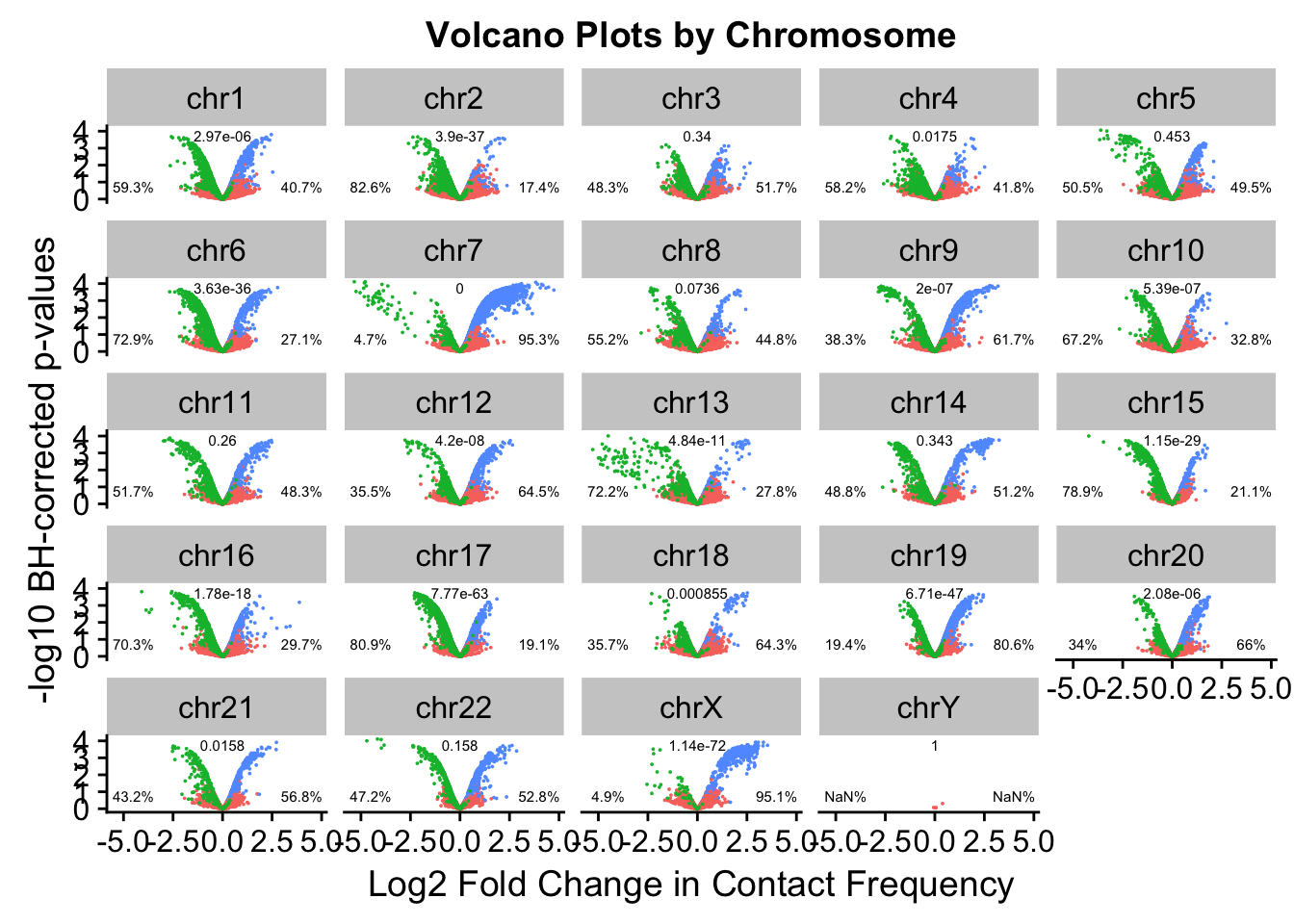

Volcano Plot Asymmetry

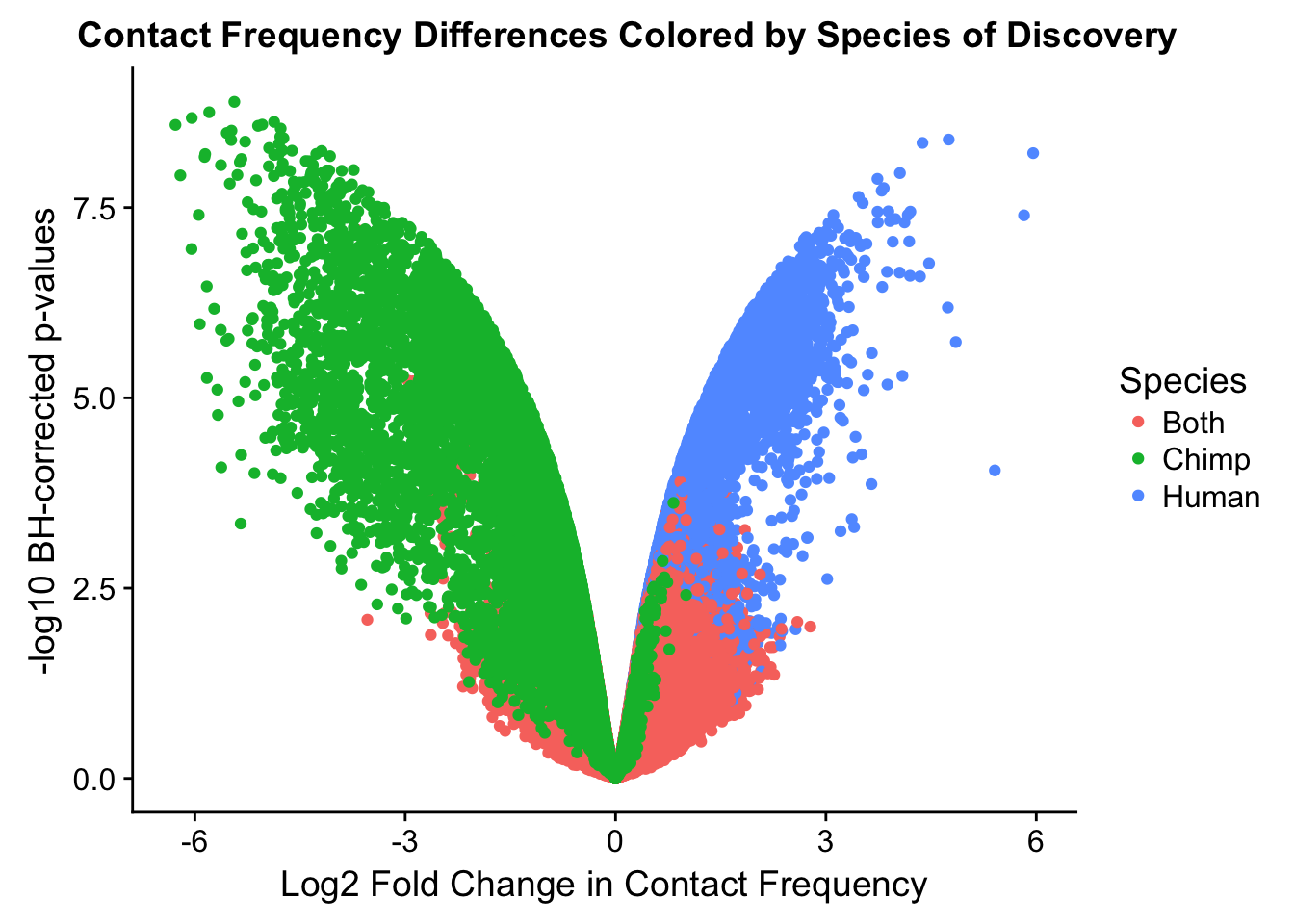

Now, I go to the asymmetry issue seen in the volcano plot on the linear modeling outputs. I first utilize the set of data conditioned upon discovery in 4 individuals, and break this information down on a chromosome-by-chromosome basis to see if there are particular chromosomes driving the asymmetry. Upon observation of chromosome-specific effects I quantify the extent of asymmetry on individual chromosomes using a null expectation of a binomial distribution with 50/50 probability of betas being positive or negative. I then repeat these analyses and visualize them on the final set of filtered data from above.

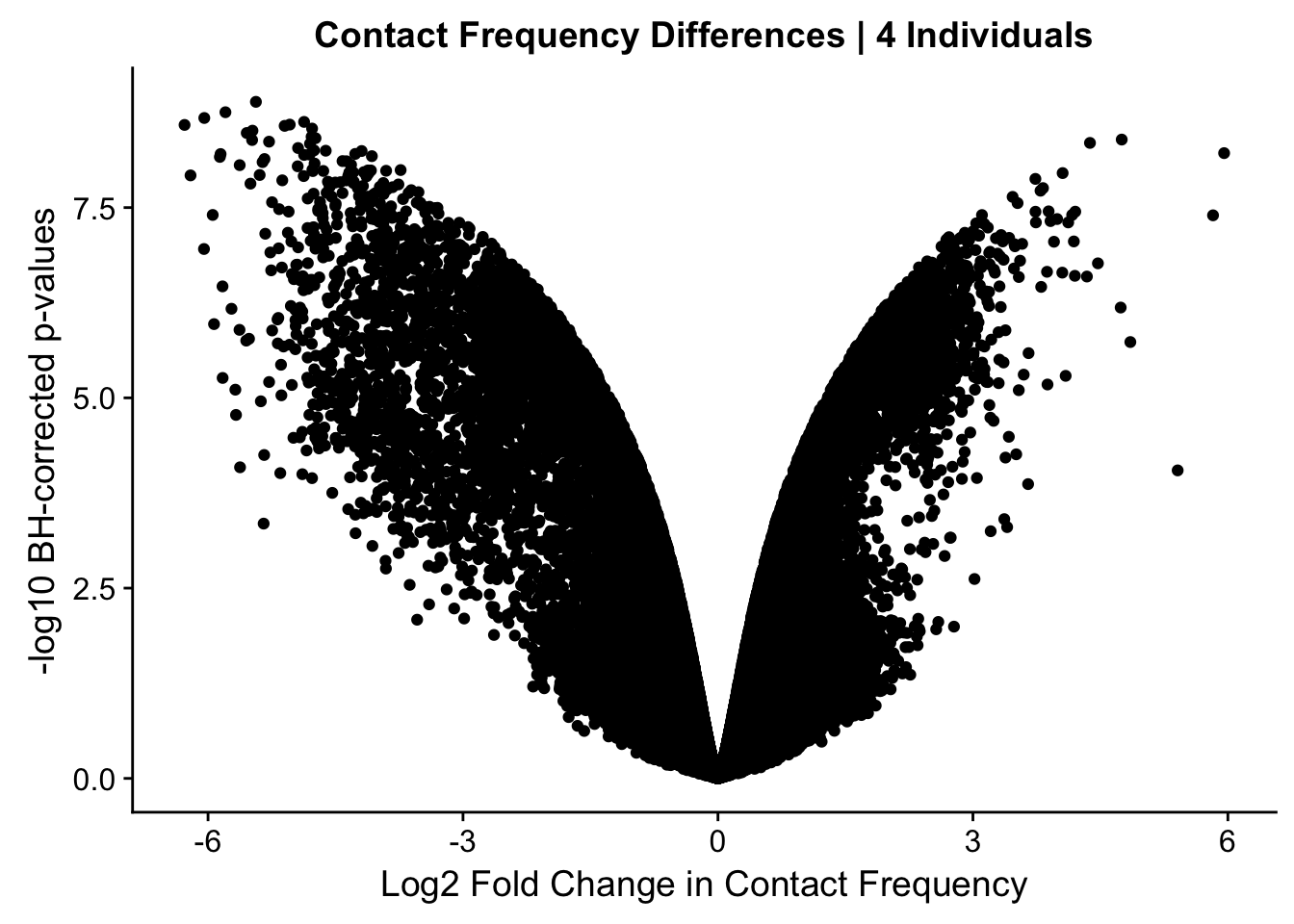

#Here I once again show the volcano plot for 4 individuals. Observe the stark asymmetry with a pile-up of hits on the left side as compared to the right.

volcplot.4 <- data.frame(pval=-log10(data.4$sp_pval), beta=data.4$sp_beta, species=data.4$disc_species, chr=data.4$Hchr)

ggplot(data=volcplot.4, aes(x=beta, y=pval)) + geom_point() + xlab("Log2 Fold Change in Contact Frequency") + ylab("-log10 BH-corrected p-values") + ggtitle("Contact Frequency Differences | 4 Individuals")

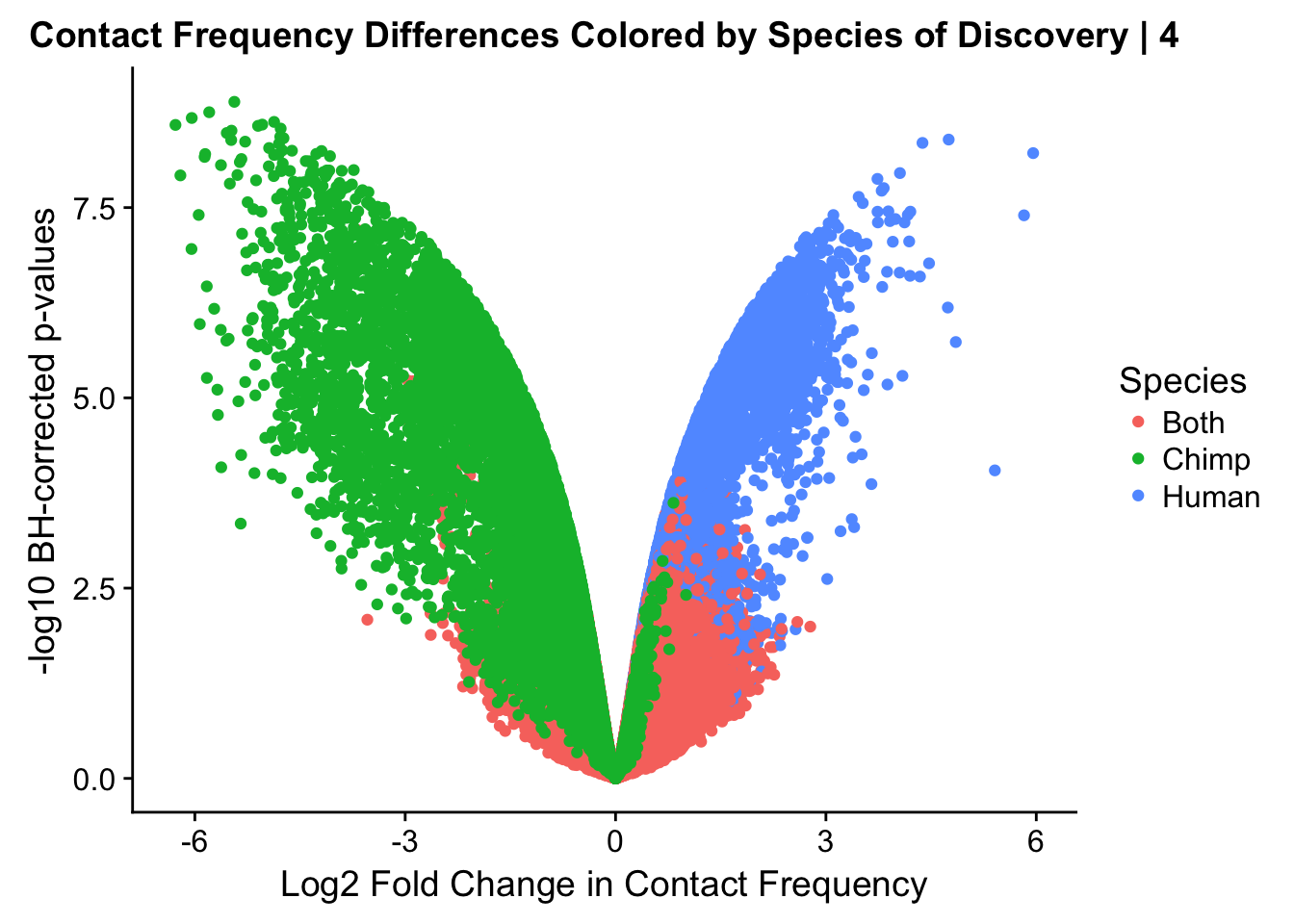

ggplot(data=volcplot.4, aes(x=beta, y=pval, color=species)) + geom_point() + xlab("Log2 Fold Change in Contact Frequency") + ylab("-log10 BH-corrected p-values") + ggtitle("Contact Frequency Differences Colored by Species of Discovery | 4") + scale_color_manual(name="Species", labels=c("Both", "Chimp", "Human"), values=c("#F8766D", "#00BA38", "#619CFF")) #Modify to add clearer labels

ggplot(data=volcplot.4, aes(x=beta, y=pval, color=species)) + geom_point() + xlab("Log2 Fold Change in Contact Frequency") + ylab("-log10 BH-corrected p-values") + ggtitle("Contact Frequency Differences Colored by Species of Discovery") + scale_color_manual(name="Species", labels=c("Both", "Chimp", "Human"), values=c("#F8766D", "#00BA38", "#619CFF")) + theme(plot.title=element_text(hjust=0.35)) #Modify to add clearer labels, hjust to adjust title location.

#As expected, we see that hits which produce a strong negative beta in the linear model (suggesting a marked decrease in contact frequency in humans as compared to chimps) are primarily discovered as significant by homer in chimpanzees. The inverse also holds true for human discoveries. This is reassuring, but still, why the asymmetry? Here I break this plot down on a chromosome-by-chromosome basis to see if this is being driven by individual chromosomes ore is an overall issue with the technique or its processing:

#First rearrange chrs to make a prettier plot that has chrs sequential:

levels(volcplot.4$chr) [1] "chr1" "chr10" "chr11" "chr12" "chr13" "chr14" "chr15" "chr16"

[9] "chr17" "chr18" "chr19" "chr2" "chr20" "chr21" "chr22" "chr3"

[17] "chr4" "chr5" "chr6" "chr7" "chr8" "chr9" "chrX" "chrY" volcplot.4$chr <- factor(volcplot.4$chr, levels(volcplot.4$chr)[c(1, 12, 16:22, 2:11, 13:15, 23:24)]) #Reorder factor levels!

levels(volcplot.4$chr) [1] "chr1" "chr2" "chr3" "chr4" "chr5" "chr6" "chr7" "chr8"

[9] "chr9" "chr10" "chr11" "chr12" "chr13" "chr14" "chr15" "chr16"

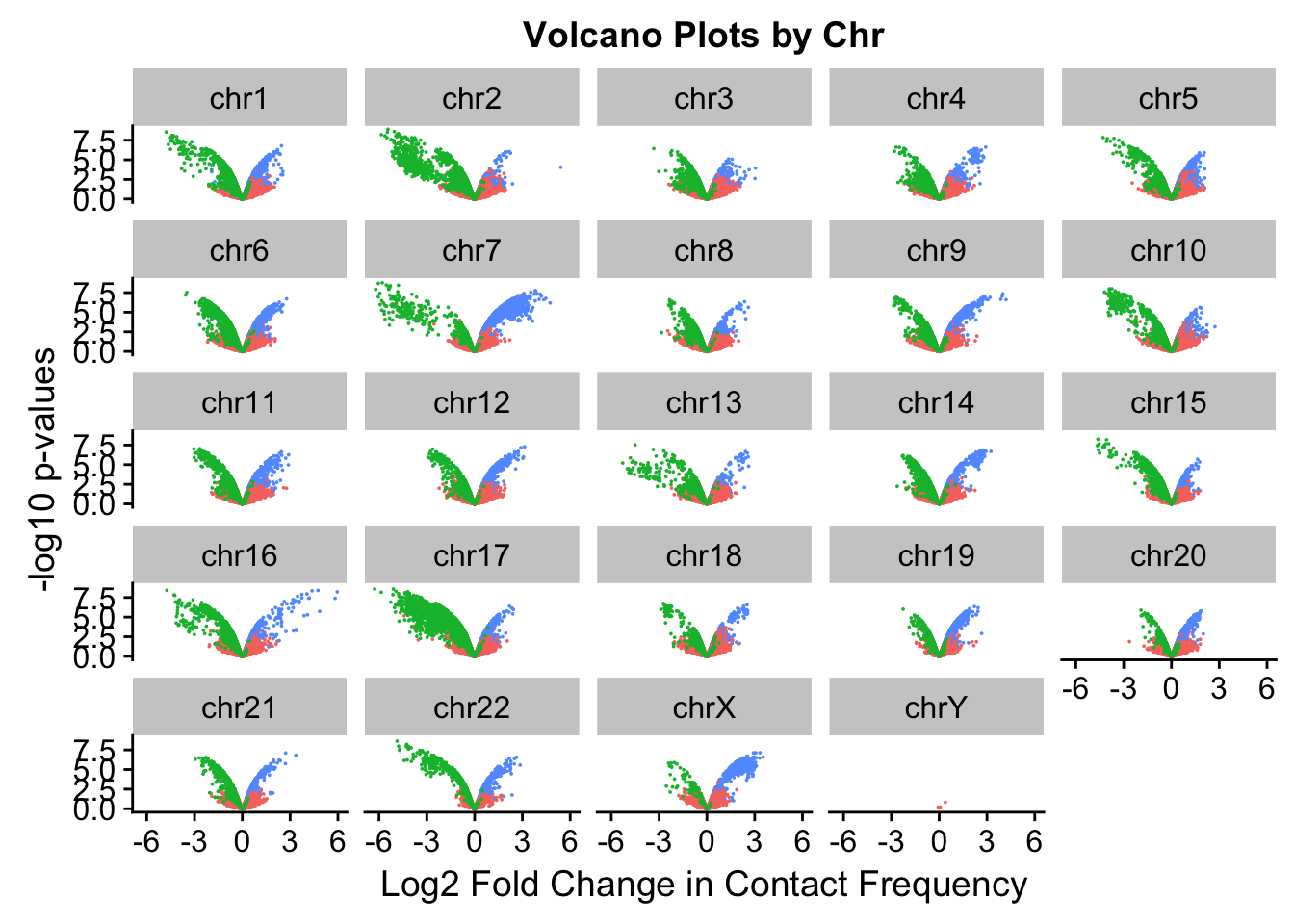

[17] "chr17" "chr18" "chr19" "chr20" "chr21" "chr22" "chrX" "chrY" ggplot(volcplot.4, aes(x=beta, y=pval, color=species)) + geom_point(size=0.001) + ggtitle("Volcano Plots by Chr") + facet_wrap(~as.factor(chr), nrow=5, ncol=5) + guides(color=FALSE) + ylab("-log10 p-values") + xlab("Log2 Fold Change in Contact Frequency")

#This is extremely interesting. It appears that the asymmetry seen is being driven primarily by only some of the chromosomes, and particularly ones where large-scale rearrangements have transpired between humans and chimps (e.g. chrs 2, 16, 17). This will warrant further investigation in another element of the analysis; for now, I am satisfied that the asymmetry is not an issue with the entire dataset but is confined to individual chromosomes.

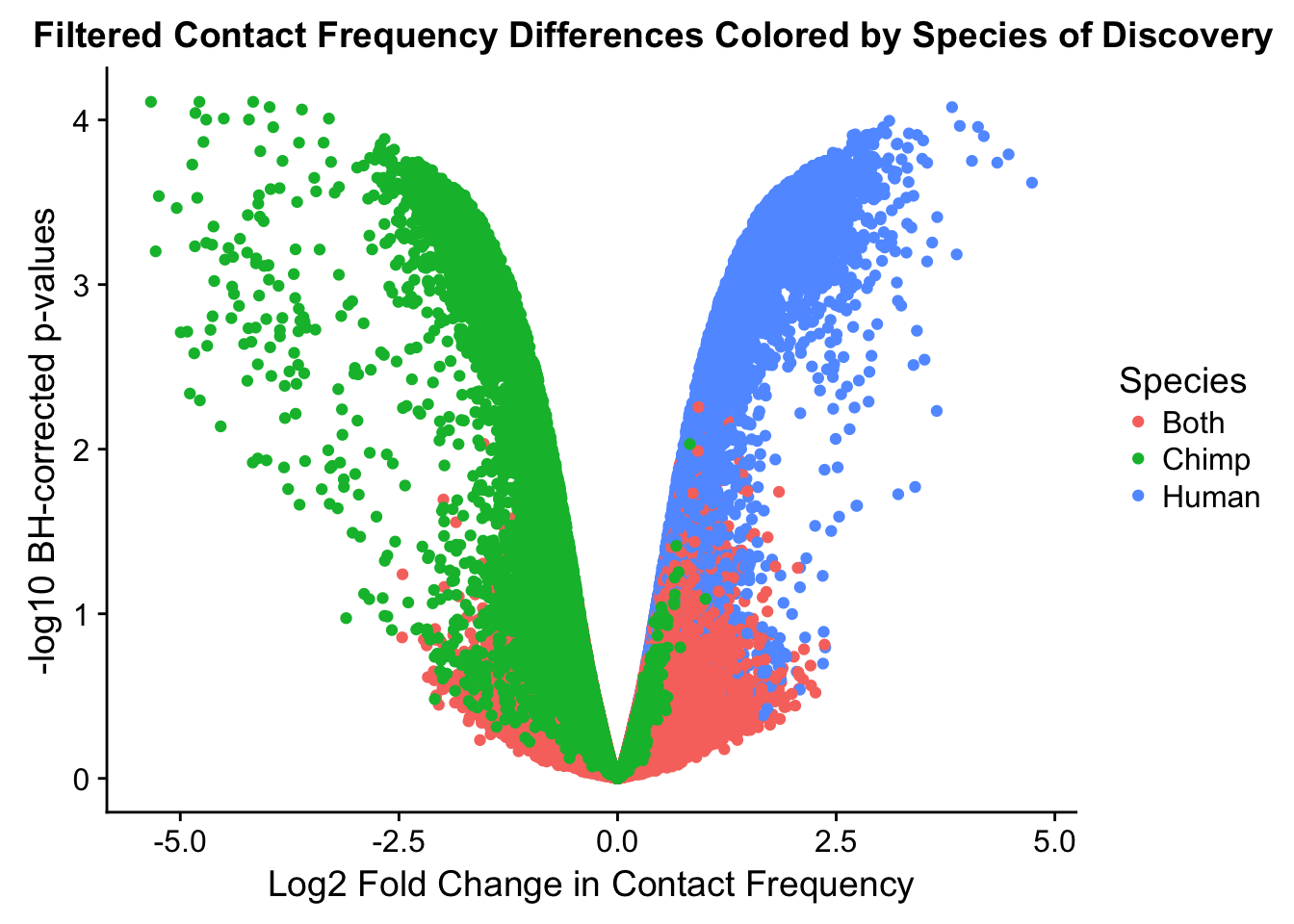

#Now, check this again, but on the data I have filtered accounting for potential genomic differences introduced by liftOver. From here on out all analyses will be run on this filtered set of data:

volcplot.filt <- data.frame(pval=-log10(data.filtered$sp_BH_pval), beta=data.filtered$sp_beta, species=data.filtered$disc_species, chr=data.filtered$Hchr)

ggplot(data=volcplot.filt, aes(x=beta, y=pval, color=species)) + geom_point() + xlab("Log2 Fold Change in Contact Frequency") + ylab("-log10 BH-corrected p-values") + ggtitle("Filtered Contact Frequency Differences Colored by Species of Discovery") + scale_color_manual(name="Species", labels=c("Both", "Chimp", "Human"), values=c("#F8766D", "#00BA38", "#619CFF")) + theme(plot.title=element_text(hjust=0.27)) #Modify to add clearer labels

#This volcano plot makes it look as though the asymmetry goes away entirely, with both sides of the volcano showing a visually similar number of hits (no longer AS stark of asymmetry).

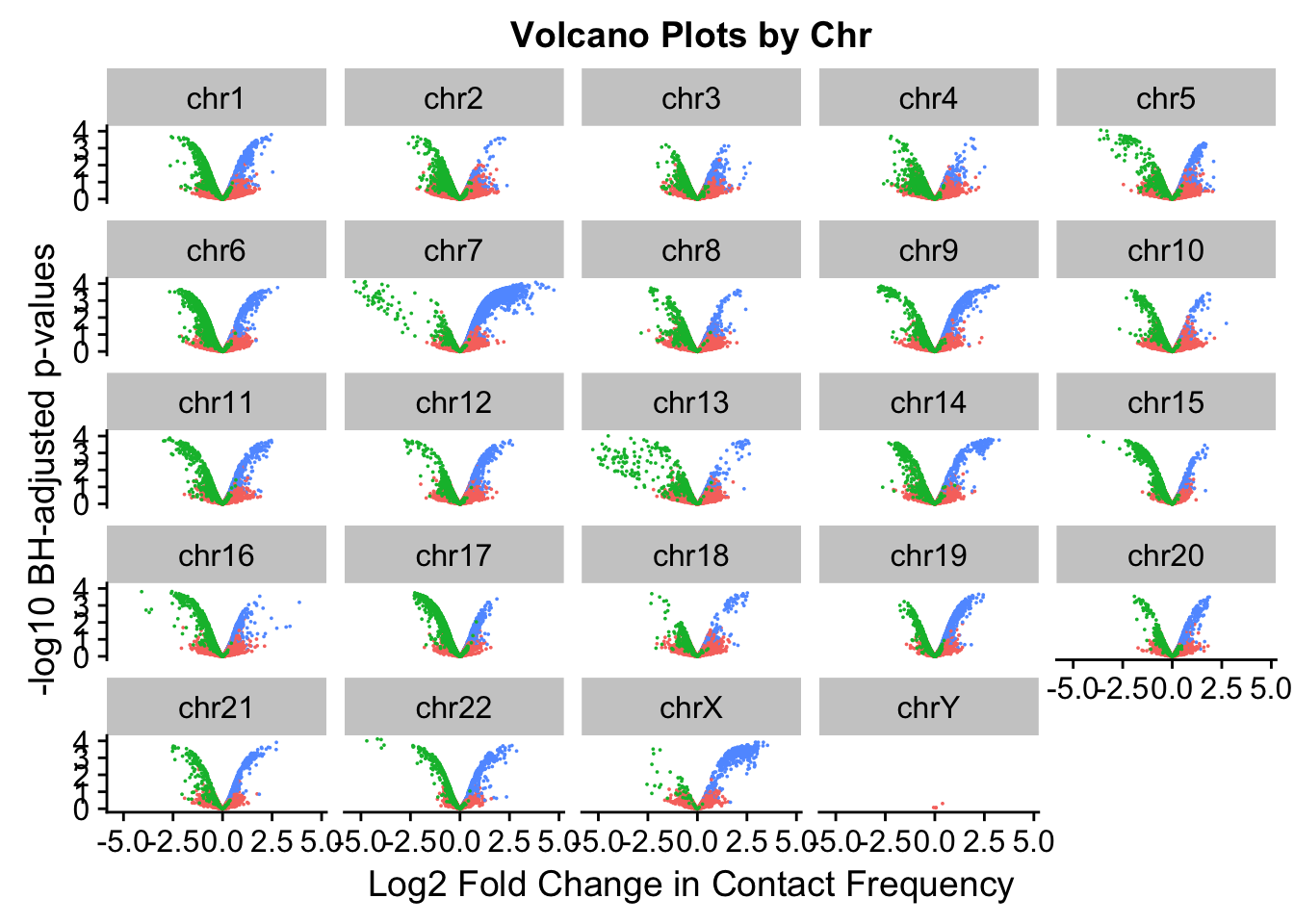

#If we break this down on a chromosome-by-chromosome basis:

levels(volcplot.filt$chr) [1] "chr1" "chr10" "chr11" "chr12" "chr13" "chr14" "chr15" "chr16"

[9] "chr17" "chr18" "chr19" "chr2" "chr20" "chr21" "chr22" "chr3"

[17] "chr4" "chr5" "chr6" "chr7" "chr8" "chr9" "chrX" "chrY" volcplot.filt$chr <- factor(volcplot.filt$chr, levels(volcplot.filt$chr)[c(1, 12, 16:22, 2:11, 13:15, 23:24)]) #Reorder factor levels!

levels(volcplot.filt$chr) [1] "chr1" "chr2" "chr3" "chr4" "chr5" "chr6" "chr7" "chr8"

[9] "chr9" "chr10" "chr11" "chr12" "chr13" "chr14" "chr15" "chr16"

[17] "chr17" "chr18" "chr19" "chr20" "chr21" "chr22" "chrX" "chrY" ggplot(volcplot.filt, aes(x=beta, y=pval, color=species)) + geom_point(size=0.001) + ggtitle("Volcano Plots by Chr") + facet_wrap(~as.factor(chr), nrow=5, ncol=5) + guides(color=FALSE) + ylab("-log10 BH-adjusted p-values") + xlab("Log2 Fold Change in Contact Frequency")

#We can still see that there are certain chromosomes with a lot of asymmetry to them in terms of distribution of significant betas for the linear modeling--chimps still seem to dominate chromosomes 2, 15-17, and some others.

#Now try to quantify the extent of asymmetry in the chromosomes, with a particular focus on hits that are statistically significant.

asym.stats <- data.frame(chr=unique(data.filtered$Hchr), binom.p=rep(NA, 24), prop.h=rep(NA, 24), prop.c=rep(NA, 24))

rownames(asym.stats) <- asym.stats$chr

for(chromo in unique(data.filtered$Hchr)){

mydat <- filter(data.filtered, Hchr==chromo, sp_BH_pval<=0.05) #Iterate through chromosomes

side <- ifelse(mydat$sp_beta<0, 0, 1) #Assign sides of the beta dist'n to the betas

asym.obs <- min(sum(side==1), sum(side==0)) #Find which side of the beta dist'n has less points, so I can use pbinom on default w/ lower.tail to find probability of observing a result as or MORE asymmetric than this one.

asym.stats[chromo, 2] <- pbinom(asym.obs, length(side), 0.5) #Find that probability with the assumption of 50/50 chance of landing on either side.

asym.stats[chromo, 3] <- sum(side==1)/length(side) #Find the proportion of the hits that fall on the human side of the distribution (positive, indicating increased contact frequency here in humans as compared to chimps)

asym.stats[chromo, 4] <- sum(side==0)/length(side) #Same thing as above, but for chimps this time.

}

#Now we can look at the actual results to quantify how asymmetric the significant hits are from each chromosome:

asym.stats chr binom.p prop.h prop.c

chr10 chr10 5.385967e-07 0.3284314 0.67156863

chr12 chr12 4.198505e-08 0.6445087 0.35549133

chr13 chr13 4.842465e-11 0.2775120 0.72248804

chr14 chr14 3.425391e-01 0.5115681 0.48843188

chr15 chr15 1.145818e-29 0.2111111 0.78888889

chr16 chr16 1.778800e-18 0.2971175 0.70288248

chr17 chr17 7.770944e-63 0.1908957 0.80910426

chr18 chr18 8.547464e-04 0.6428571 0.35714286

chr19 chr19 6.708114e-47 0.8058252 0.19417476

chr1 chr1 2.966482e-06 0.4072848 0.59271523

chr20 chr20 2.084187e-06 0.6602871 0.33971292

chr21 chr21 1.584002e-02 0.5676692 0.43233083

chr22 chr22 1.576454e-01 0.5279330 0.47206704

chr11 chr11 2.604538e-01 0.4829268 0.51707317

chr2 chr2 3.902883e-37 0.1737892 0.82621083

chr3 chr3 3.398324e-01 0.5165877 0.48341232

chr4 chr4 1.751408e-02 0.4180791 0.58192090

chr5 chr5 4.525288e-01 0.4946619 0.50533808

chr6 chr6 3.627575e-36 0.2710927 0.72890733

chr7 chr7 0.000000e+00 0.9532921 0.04670793

chr8 chr8 7.356143e-02 0.4476190 0.55238095

chr9 chr9 1.996949e-07 0.6168421 0.38315789

chrX chrX 1.139666e-72 0.9512195 0.04878049

chrY chrY 1.000000e+00 NaN NaN#ChrY did not have any significant hits, so get a p-value of 1. Chr7 is so asymmetric it is impossible to calculate, while chromosomes 1, 2, 6, 9, 11, 15, 16, 17, and X all showed asymmetry in direction of effects with extreme statistical significance.

#Now, make volcano plots by chromosome again, this time labeling each chromosome with its binomial p-value and the percentage of significant hits showing stronger contact frequencies in each species on either side of the distribution.

ggplot(data=volcplot.filt, aes(x=beta, y=pval, color=species)) + geom_point(size=0.001) + ggtitle("Volcano Plots by Chromosome") + facet_wrap(~chr, nrow=5, ncol=5) + guides(color=FALSE) + ylab("-log10 BH-corrected p-values") + xlab("Log2 Fold Change in Contact Frequency") + geom_text(data=asym.stats, aes(x=-4.5, y=0.75, label=paste(round(prop.c*100, digits=1), "%", sep=""), color=NULL), show.legend=FALSE, size=2) + geom_text(data=asym.stats, aes(x=4, y=0.75, label=paste(round(prop.h*100, digits=1), "%", sep=""), color=NULL), show.legend=FALSE, size=2) + geom_text(data=asym.stats, aes(x=0, y=3.75, label=signif(binom.p, digits=3), color=NULL), show.legend=FALSE, size=2)

#ggsave('~/Desktop/volcchr.jpg', device="jpeg", antialias="none") This was an earlier attempt at clearing up some image resolution blurriness with the text.

#ggsave('~/Desktop/volcchr2.jpg', device="jpeg", dpi=5000) #Works well!

ggsave("~/Desktop/volcchr2.jpg", device="jpg", dpi=200)Saving 7 x 5 in image#Write out the data with the new columns added on!

fwrite(full.data, "~/Desktop/Hi-C/full.data.10.2018", quote = TRUE, sep = "\t", row.names = FALSE, col.names = TRUE, na="NA", showProgress = FALSE)

fwrite(data.4, "~/Desktop/Hi-C/data.4.10.2018", quote=FALSE, sep="\t", row.names=FALSE, col.names=TRUE, na="NA", showProgress = FALSE)

fwrite(data.filtered, "~/Desktop/Hi-C/data.4.filtered.txt", quote=FALSE, sep="\t", row.names=FALSE, col.names=TRUE, na="NA", showProgress = FALSE)From this analysis we have dealt with some quality control issues, and filtered down the data to a final set of biologically significant Hi-C interaction frequencies, many of which appear species-specific. There are clearly strong differences between the species that make their 3D regulatory landscapes divergent. Now, I move to orthogonal gene expression analyses.

Session information

sessionInfo()R version 3.4.0 (2017-04-21)

Platform: x86_64-apple-darwin15.6.0 (64-bit)

Running under: OS X El Capitan 10.11.6

Matrix products: default

BLAS: /Library/Frameworks/R.framework/Versions/3.4/Resources/lib/libRblas.0.dylib

LAPACK: /Library/Frameworks/R.framework/Versions/3.4/Resources/lib/libRlapack.dylib

locale:

[1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

attached base packages:

[1] compiler stats graphics grDevices utils datasets methods

[8] base

other attached packages:

[1] bindrcpp_0.2 bedr_1.0.4 forcats_0.3.0

[4] purrr_0.2.4 readr_1.1.1 tibble_1.4.2

[7] tidyverse_1.2.1 edgeR_3.20.9 RColorBrewer_1.1-2

[10] heatmaply_0.14.1 viridis_0.5.0 viridisLite_0.3.0

[13] stringr_1.3.0 gplots_3.0.1 Hmisc_4.1-1

[16] Formula_1.2-2 survival_2.41-3 lattice_0.20-35

[19] dplyr_0.7.4 plotly_4.7.1 cowplot_0.9.3

[22] ggplot2_2.2.1 reshape2_1.4.3 data.table_1.10.4-3

[25] tidyr_0.8.0 plyr_1.8.4 limma_3.34.9

loaded via a namespace (and not attached):

[1] colorspace_1.3-2 class_7.3-14 modeltools_0.2-21

[4] mclust_5.4 rprojroot_1.3-2 htmlTable_1.11.2

[7] futile.logger_1.4.3 base64enc_0.1-3 rstudioapi_0.7

[10] flexmix_2.3-14 mvtnorm_1.0-7 lubridate_1.7.3

[13] xml2_1.2.0 R.methodsS3_1.7.1 codetools_0.2-15

[16] splines_3.4.0 mnormt_1.5-5 robustbase_0.92-8

[19] knitr_1.20 jsonlite_1.5 broom_0.4.3

[22] cluster_2.0.6 kernlab_0.9-25 R.oo_1.21.0

[25] httr_1.3.1 backports_1.1.2 assertthat_0.2.0

[28] Matrix_1.2-12 lazyeval_0.2.1 cli_1.0.0

[31] acepack_1.4.1 htmltools_0.3.6 tools_3.4.0

[34] gtable_0.2.0 glue_1.2.0 Rcpp_0.12.16

[37] cellranger_1.1.0 trimcluster_0.1-2 gdata_2.18.0

[40] nlme_3.1-131.1 iterators_1.0.9 fpc_2.1-11

[43] psych_1.7.8 testthat_2.0.0 rvest_0.3.2

[46] gtools_3.5.0 dendextend_1.7.0 DEoptimR_1.0-8

[49] MASS_7.3-49 scales_0.5.0 TSP_1.1-5

[52] hms_0.4.2 parallel_3.4.0 lambda.r_1.2

[55] yaml_2.1.18 gridExtra_2.3 rpart_4.1-13

[58] latticeExtra_0.6-28 stringi_1.1.7 gclus_1.3.1

[61] foreach_1.4.4 checkmate_1.8.5 seriation_1.2-3

[64] caTools_1.17.1 rlang_0.2.0 pkgconfig_2.0.1

[67] prabclus_2.2-6 bitops_1.0-6 evaluate_0.10.1

[70] bindr_0.1.1 labeling_0.3 htmlwidgets_1.0

[73] magrittr_1.5 R6_2.2.2 pillar_1.2.1

[76] haven_1.1.1 whisker_0.3-2 foreign_0.8-69

[79] nnet_7.3-12 modelr_0.1.1 crayon_1.3.4

[82] futile.options_1.0.0 KernSmooth_2.23-15 rmarkdown_1.9

[85] locfit_1.5-9.1 grid_3.4.0 readxl_1.0.0

[88] git2r_0.21.0 digest_0.6.15 diptest_0.75-7

[91] webshot_0.5.0 VennDiagram_1.6.19 R.utils_2.6.0

[94] stats4_3.4.0 munsell_0.4.3 registry_0.5 This R Markdown site was created with workflowr